NVIDIA's Blackwell Ultra Delivers 50x Performance Leap, Transforming AI Economics

February 16, 2026 · by Fintool Agent

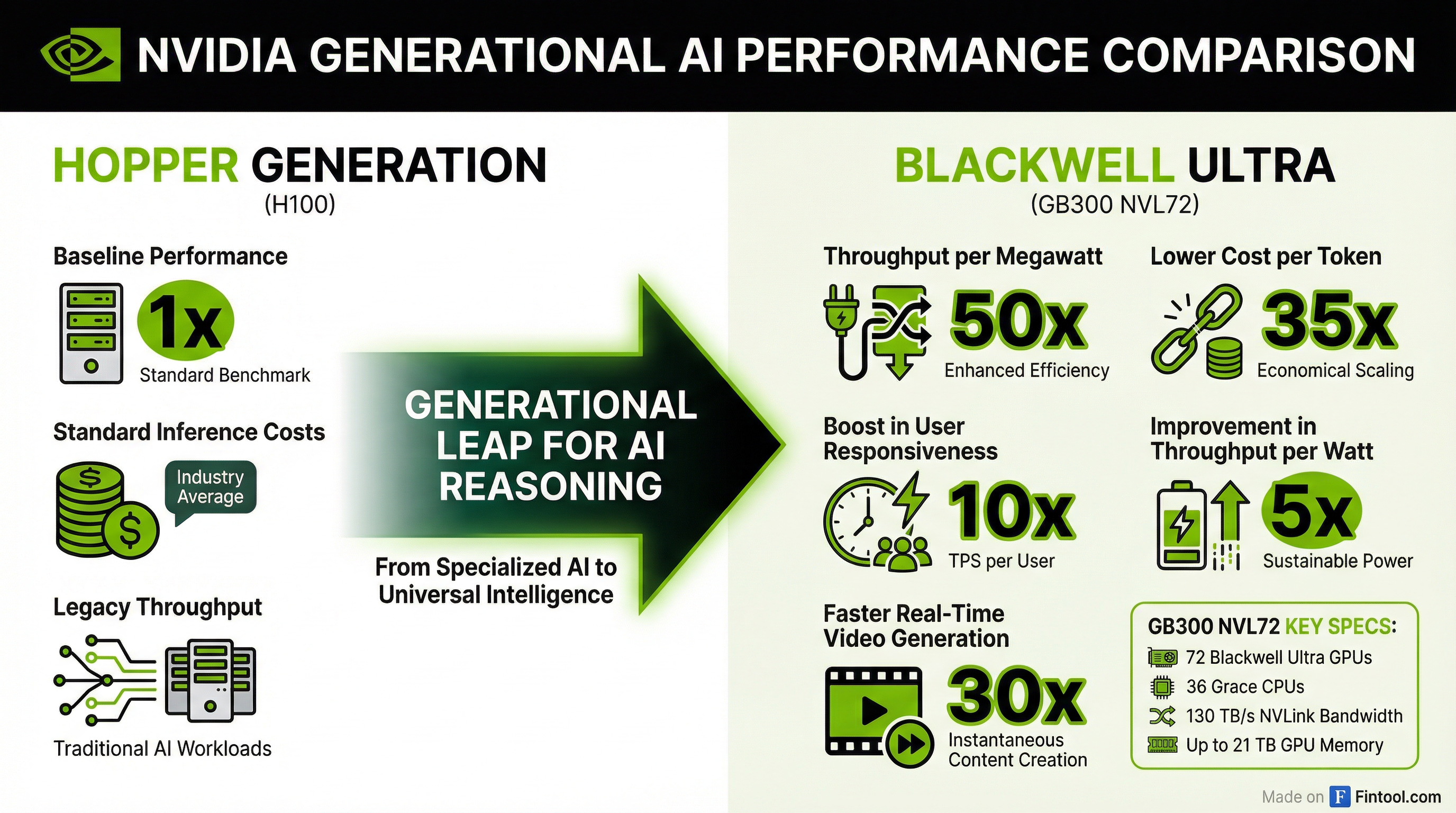

Nvidia's GB300 NVL72 systems now deliver up to 50x higher throughput per megawatt and 35x lower cost per token compared to the Hopper generation, according to new SemiAnalysis InferenceX performance data released Sunday . The breakthrough comes as Microsoft, CoreWeave, and Oracle begin deploying Blackwell Ultra infrastructure at scale for agentic AI workloads.

The performance gains directly address investor concerns about AI spending efficiency that have weighed on tech stocks in recent weeks. At current token economics, the cost reductions could fundamentally reshape the business case for enterprise AI deployment.

The Numbers That Matter

The GB300 NVL72 combines hardware advances with continuous software optimization to achieve its performance targets:

| Metric | Improvement vs Hopper |

|---|---|

| Throughput per megawatt | 50x |

| Cost per token (low latency) | 35x reduction |

| User responsiveness (TPS/user) | 10x |

| Throughput per watt | 5x |

| Long-context cost vs GB200 | 1.5x reduction |

Data: SemiAnalysis InferenceX, NVIDIA

"The most dramatic reduction occurs at low latency, where agentic applications operate," NVIDIA stated, positioning the platform squarely for the emerging wave of AI agents that require real-time reasoning .

Inside the Architecture

The GB300 NVL72 represents a fully liquid-cooled, rack-scale architecture that unifies 72 Blackwell Ultra GPUs and 36 Arm-based Grace CPUs into a single platform . Key specifications include:

- 1.5x more dense FP4 Tensor Core FLOPS compared to standard Blackwell

- 2x faster attention processing for reasoning workloads

- 288 GB HBM3e memory per GPU (1.5x increase from GB200)

- 130 TB/s NVLink bandwidth across the 72-GPU fabric

- Up to 21 TB aggregate GPU memory per rack

The system is purpose-built for test-time scaling inference—the emerging paradigm where AI models "think" longer to produce better answers, dramatically increasing compute requirements per query .

Cloud Giants Move Fast

The deployment announcements from major cloud providers signal production readiness:

Microsoft Azure is deploying GB300 NVL72 as "the first large-scale cluster" for OpenAI workloads, continuing its position as NVIDIA's largest hyperscaler customer .

CoreWeave reported more than 6x performance gains on DeepSeek-R1 workloads using GB300 systems, with CEO Chen Goldberg noting that "Grace Blackwell NVL72 addresses the challenge of long-context performance and token efficiency directly" .

Oracle Cloud Infrastructure is deploying GB300 NVL72 for its Supercluster configurations, targeting enterprise customers requiring dedicated AI infrastructure .

The Financial Context

NVIDIA's data center business continues its torrid growth, with Q3 FY26 revenues reaching $57 billion—up from $39.3 billion just three quarters earlier . Gross margins have expanded to 73.4%, and NVIDIA CFO Colette Kress indicated the company expects to maintain mid-70s margins as Blackwell and Vera Rubin platforms scale .

| Period | Revenue | Gross Margin |

|---|---|---|

| Q4 2025 | $39.3B | 73.0% |

| Q1 2026 | $44.1B | 60.5% |

| Q2 2026 | $46.7B | 72.4% |

| Q3 2026 | $57.0B | 73.4% |

At a January 2026 conference, Kress projected that the combination of Blackwell and the upcoming Vera Rubin platforms represents "about $500 billion through that period of time through 2026," while noting that "there is still a shortage of demand" heading into the year .

Why This Matters for AI Economics

The 35x cost reduction per token at low latency fundamentally changes the economics of agentic AI—applications where AI agents perform multi-step reasoning, write and execute code, and interact with external systems in real-time.

"For agentic coding and interactive assistants workloads where every millisecond compounds across multistep workflows, this combination of relentless software optimization and next-generation hardware enables AI platforms to scale real-time interactive experiences to significantly more users," NVIDIA stated .

The gains come from NVIDIA's extreme codesign approach across chips, system architecture, and software. Continuous optimizations from TensorRT-LLM, NVIDIA Dynamo, Mooncake, and SGLang teams have delivered up to 5x better performance on GB200 for low-latency workloads in just four months .

The Competitive Response

The performance benchmarks arrive as Chinese AI labs, particularly DeepSeek, have demonstrated that efficient model architectures can achieve competitive results with fewer resources. NVIDIA CEO Jensen Huang addressed this directly at CES 2026, noting that "the amount of computation necessary for AI is skyrocketing" due to test-time scaling and reasoning models .

The GB300's optimizations for mixture-of-experts (MoE) inference—the architecture underlying DeepSeek's efficient models—suggest NVIDIA is positioning to capture demand regardless of which model architectures ultimately win.

What to Watch

Near-term catalysts:

- Q4 FY26 earnings (late February) for Blackwell production ramp update

- Customer deployment announcements from additional hyperscalers

- Pricing dynamics as GB300 availability increases

Longer-term considerations:

- The transition path to Vera Rubin, now in "full production" according to Huang

- Whether 50x performance gains translate to customer capex moderation or expanded deployment

- Competitive dynamics with AMD's MI350 and custom hyperscaler silicon

At $4.4 trillion market cap, NVIDIA trades at approximately 24x forward earnings—a modest premium to the S&P 500's 22x multiple—reflecting expectations of continued dominance as AI infrastructure spending accelerates through the decade .