Samsung Begins World's First HBM4 Mass Production for Nvidia's Vera Rubin

February 8, 2026 · by Fintool Agent

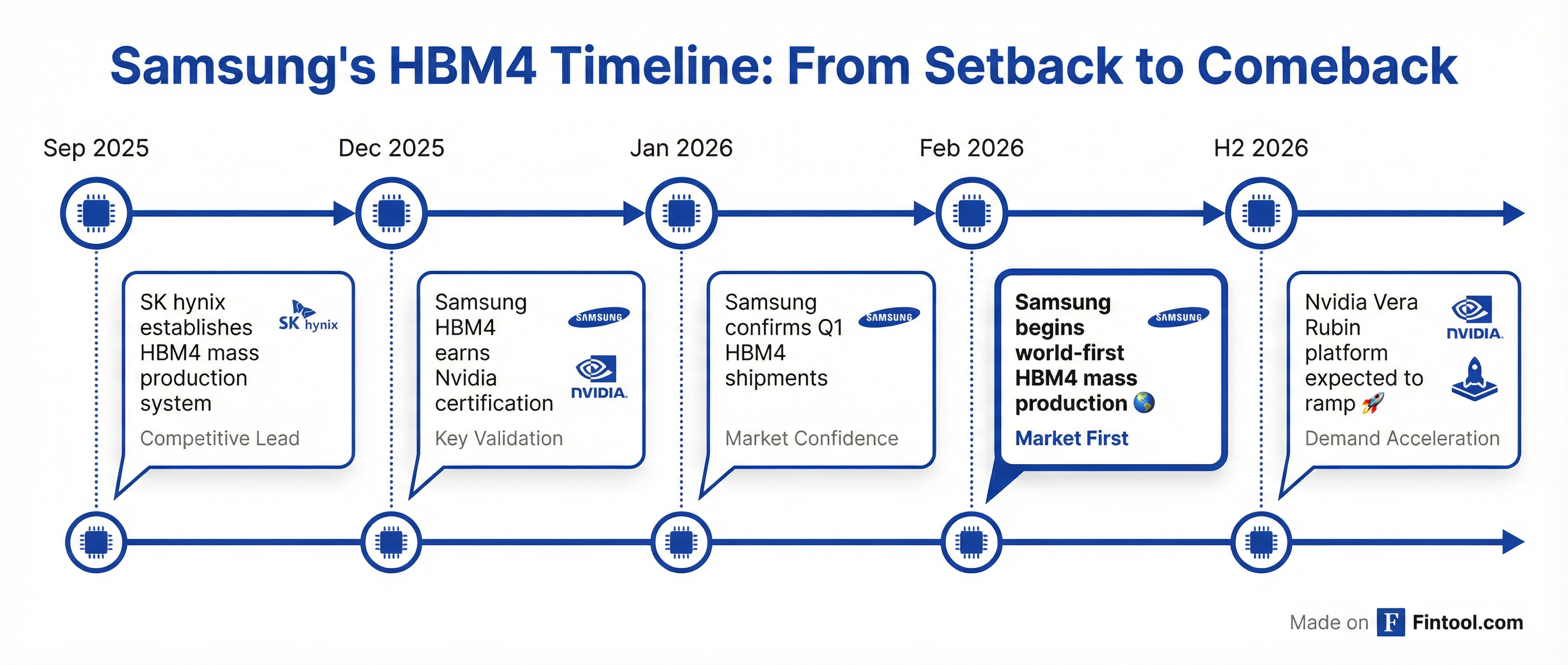

Samsung Electronics will begin the world's first mass production of sixth-generation high-bandwidth memory (HBM4) chips next week, marking a critical milestone in the Korean giant's comeback bid for the AI memory market. The chips are destined for Nvidia's next-generation Vera Rubin AI accelerators, expected to ramp in the second half of 2026.

The move represents Samsung's redemption arc after struggling with HBM3E qualification issues that allowed rival SK hynix to dominate the AI memory market. Samsung has now passed Nvidia's rigorous quality certification process and secured purchase orders, with production schedules finalized to align with Vera Rubin's launch timeline.

The Stakes: A $100 Billion Market by 2028

The HBM market is set for explosive growth. Micron CEO Sanjay Mehrotra recently projected the HBM total addressable market (TAM) will grow from approximately $35 billion in 2025 to $100 billion by 2028, a compound annual growth rate of roughly 40%, with the $100 billion mark arriving two years earlier than previously forecast.

"Remarkably, this 2028 HBM TAM projection is larger than the size of the entire DRAM market in calendar 2024," Mehrotra said on Micron's Q1 FY26 earnings call. "Memory is now essential to AI's cognitive functions, fundamentally altering its role from a system component to a strategic asset that dictates product performance."

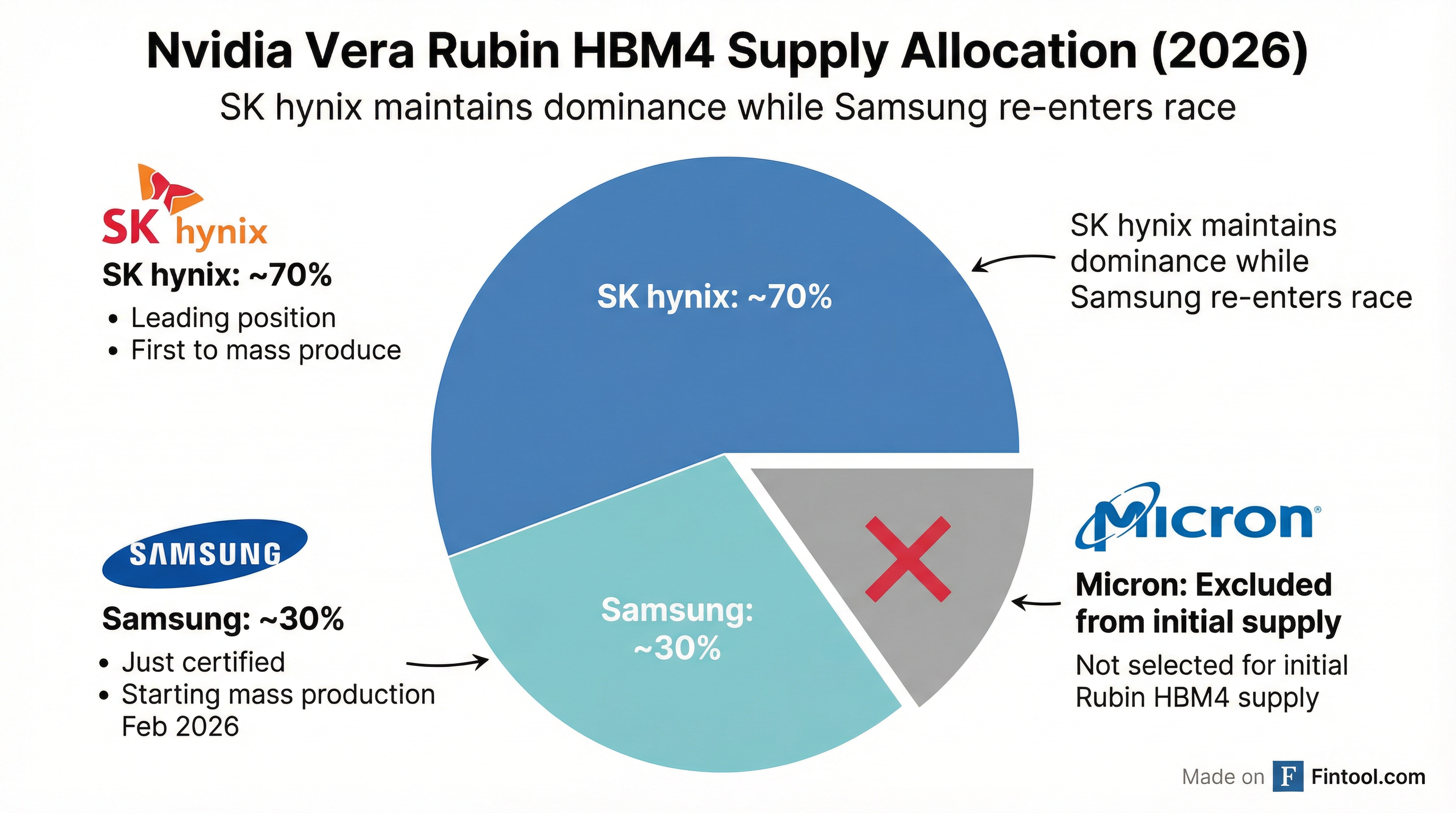
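The growth rate implied by Mehrotra's projection can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `cagr` and the variable names are assumptions made for this example, and it treats 2025 to 2028 as three annual compounding periods using the article's rounded figures of $35 billion and $100 billion.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded figures quoted in the article, in USD billions.
hbm_tam_2025 = 35.0
hbm_tam_2028 = 100.0

# Assumption: three annual compounding steps (2025 -> 2028).
rate = cagr(hbm_tam_2025, hbm_tam_2028, years=2028 - 2025)
multiple = hbm_tam_2028 / hbm_tam_2025

print(f"Implied HBM TAM CAGR: {rate:.1%}")
print(f"2028 TAM as a multiple of 2025: {multiple:.2f}x")
```

With these inputs the implied rate comes out near 42%, consistent with the "roughly 40%" figure cited, and the 2028 TAM is a little under three times the 2025 level.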

The Competitive Landscape: SK hynix Dominates, Samsung Re-enters

While Samsung's HBM4 breakthrough is significant, SK hynix maintains the dominant position in Nvidia's supply chain. Industry sources indicate SK hynix has secured approximately 70% of Nvidia's HBM4 orders for the Vera Rubin platform, well above the roughly 50% share previously anticipated.

Samsung's approximately 30% allocation represents a meaningful comeback after being largely shut out of HBM3E supply to Nvidia. Notably, Micron has been excluded from Nvidia's initial HBM4 supplier list after reportedly failing to meet performance requirements, despite Micron's claims of "industry-leading" HBM4 specifications.

Market research firm Counterpoint Research projects 2026 global HBM4 market share at:

| Supplier | Projected 2026 HBM4 Share |
|---|---|
| SK hynix | 54% |
| Samsung | 28% |
| Micron | 18% |

Samsung's Recovery Timeline

Samsung's HBM struggles have been well-documented. The company fell behind SK hynix in HBM3E qualification, creating an opening that its Korean rival exploited to capture dominant market share with Nvidia and other AI chip makers.

The turnaround accelerated in late 2025 when Samsung's HBM4 samples earned positive feedback from Nvidia's qualification process. In January 2026, Samsung confirmed plans to mass-produce and ship HBM4 from Q1, positioning itself for the upcoming Vera Rubin ramp.

"Samsung, which has the world's largest production capacity and the broadest product lineup, has demonstrated a recovery in its technological competitiveness by becoming the first to mass-produce the highest-performing HBM4," said an industry source familiar with the matter.

Why HBM4 Matters: The AI Infrastructure Buildout

HBM4 is critical for next-generation AI accelerators because it delivers substantially higher bandwidth and lower power consumption than current HBM3E chips. Nvidia's Vera Rubin platform, powered by seven chips, will deliver what the company describes as an "X-factor improvement in performance relative to Blackwell."

The scale of AI infrastructure investment is staggering. Nvidia CFO Colette Kress noted that analyst expectations for the aggregate 2026 CapEx of top cloud service providers and hyperscalers now sit at roughly $600 billion, more than $200 billion higher than start-of-year estimates.

Nvidia has visibility to $500 billion in Blackwell and Rubin revenue from the start of 2025 through the end of calendar 2026, with the company noting demand continues to exceed expectations.

| Metric | NVDA Q3 FY26 | NVDA Q2 FY26 | NVDA Q1 FY26 |
|---|---|---|---|
| Revenue | $57.0B | $46.7B | $44.1B |
| Net Income | $31.9B | $26.4B | $18.8B |
| Gross Margin | 73.4% | 72.4% | 60.5% |

Implications for Micron

Micron's exclusion from Nvidia's initial HBM4 supply list is a setback for the U.S. memory maker, even as it continues to post strong results driven by HBM3E demand. In its Q1 FY26 earnings call, CEO Sanjay Mehrotra emphasized the company's competitive positioning and differentiated HBM4 product.

"We feel very good about our competitive position," Mehrotra said. "We feel very, very good about our product and our HBM4 product that we have highlighted as industry-leading performance over 11 gigabit per second, the best specifications in the industry."

Micron has completed agreements for its entire calendar 2026 HBM supply with other customers and expects strong year-over-year HBM revenue growth despite missing the initial Nvidia HBM4 allocation.

| Metric | MU Q1 FY26 | MU Q4 FY25 | MU Q3 FY25 |
|---|---|---|---|
| Revenue | $13.6B | $11.3B | $9.3B |
| Net Income | $5.2B | $3.2B | $1.9B |
| Gross Margin | 56.0% | 44.7% | 37.7% |

What to Watch

H2 2026 Vera Rubin Ramp: Nvidia confirmed the Rubin platform remains on track to ramp in the second half of 2026. The speed and scale of this ramp will determine how quickly Samsung's HBM4 production translates to revenue.

Samsung's Volume Execution: While Samsung has achieved first-to-mass-production status for HBM4, maintaining yield and quality at scale will be critical to expanding its Nvidia allocation over time.

Supply Tightness: Micron's CEO noted that "the gap between the demand and supply for all of DRAM, including HBM, is really the highest that we have ever seen." This structural tightness should persist beyond calendar 2026, supporting pricing power across the memory industry.

HBM4E and Beyond: Both Samsung and SK hynix are already developing customized HBM4E variants for key customers, so the next round of technology competition is underway before HBM4 has fully ramped.

The Bottom Line

Samsung's HBM4 mass production launch represents a meaningful inflection in the AI memory supply chain. After ceding ground to SK hynix in HBM3E, Samsung's first-mover status on HBM4 production demonstrates recovered technological competitiveness—even if SK hynix maintains the dominant Nvidia relationship.

For Nvidia, the addition of Samsung as a qualified HBM4 supplier provides crucial supply chain redundancy as it prepares for the Vera Rubin ramp. With AI infrastructure investment accelerating and the HBM market projected to nearly triple between 2025 and 2028, having multiple high-volume suppliers is increasingly strategic.

The AI memory race is just getting started.
