One Year After DeepSeek's $600 Billion Nvidia Crash, Chinese AI Labs Race Ahead

January 27, 2026 · by Fintool Agent

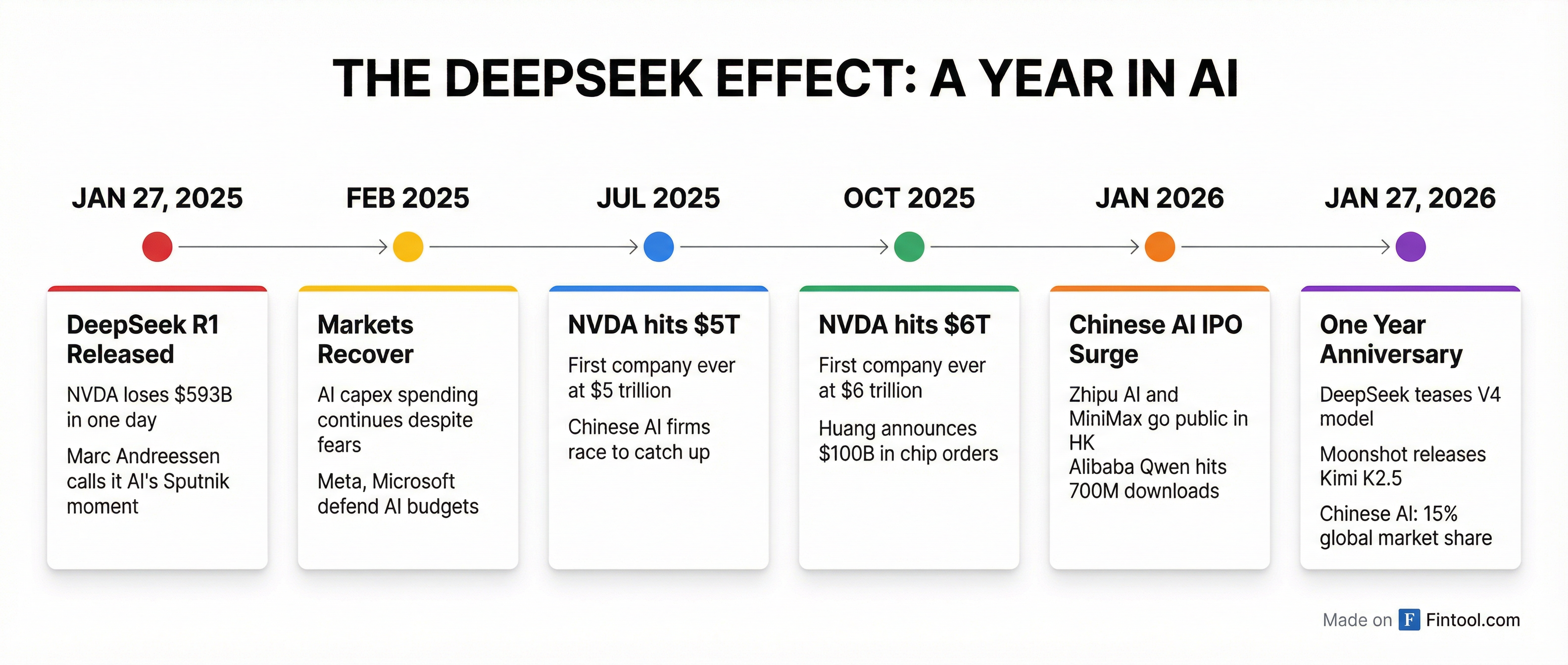

Exactly one year ago today, a reasoning model from DeepSeek, a then little-known Chinese AI startup, wiped $593 billion from Nvidia's market cap in a single trading session, the largest single-day loss of market value for any company in stock market history.

Now, as investors mark the anniversary of what Marc Andreessen called "AI's Sputnik moment," Chinese AI firms are accelerating model releases, DeepSeek is teasing a powerful new model, and the competitive landscape has shifted far more than Wall Street anticipated.

The Day the Chips Stood Still

On January 27, 2025, markets reacted to DeepSeek's R1 model, which was reportedly trained for under $6 million on export-restricted H800 chips and posted benchmark results comparable to OpenAI's best models. The implication was devastating for the prevailing investment thesis: if a Chinese lab could reach frontier AI performance at a fraction of the cost, the billions being poured into Nvidia GPUs might be massive overkill.

| Metric | Jan 24, 2025 | Jan 27, 2025 | Change |
|---|---|---|---|
| NVDA Stock Price | $142.62 | $118.42 | -17.0% |
| NVDA Market Cap | $3.49T | $2.90T | -$593B |
| Daily Volume | 235M shares | 819M shares | +249% |

Data: S&P Global
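The Change column can be reproduced from the two date columns. The snippet below is a minimal sketch that uses only the rounded figures printed in the table; the helper name `pct_change` and the variable names are illustrative, not taken from any data provider's API.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Figures as printed in the table (rounded by the source).
price_before, price_after = 142.62, 118.42      # USD per share
cap_before_t, cap_after_t = 3.49, 2.90          # USD trillions
volume_before_m, volume_after_m = 235, 819      # millions of shares

price_move = pct_change(price_before, price_after)
cap_loss_b = (cap_before_t - cap_after_t) * 1000  # trillions -> billions
volume_move = pct_change(volume_before_m, volume_after_m)

print(f"Price change:  {price_move:.1f}%")
print(f"Cap loss:      ${cap_loss_b:.0f}B (from rounded caps)")
print(f"Volume change: {volume_move:+.0f}%")
```

The price and volume changes come out at about -17.0% and +249%, matching the table. The market cap difference computed from the rounded trillions is about $590 billion; the reported $593 billion presumably reflects unrounded market cap values.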

The selloff was indiscriminate. Broadcom plunged 17%, ASML dropped 7%, and even AI-adjacent energy and utility stocks sold off as investors questioned the entire infrastructure buildout thesis.

The Recovery That Defied the Bears

The panic proved short-lived. By mid-February 2025, Nvidia had clawed back most of its losses. On October 29, 2025, it became the first company in history to reach a $5 trillion market valuation.

"There was a massive overreaction to DeepSeek," Google DeepMind CEO Demis Hassabis said at this year's World Economic Forum in Davos.

The feared capex pullback never materialized. Instead, Big Tech doubled down. Meta, Microsoft, Amazon, and Alphabet are expected to deploy roughly $475 billion in capital expenditures in 2026, according to Bloomberg consensus estimates.

Today, Nvidia trades at $188.52 with a market cap of $4.59 trillion, about 58% above the $2.90 trillion valuation it closed at on the day of the DeepSeek shock.
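As a rough check on the size of the rebound, the sketch below compares today's figures with the January 27, 2025 closing values from the table earlier. Treating that close as the "low" is an assumption (the article does not say whether its low is a closing or an intraday figure), and the variable names are illustrative.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return (after - before) / before * 100.0


# Assumed baseline: the January 27, 2025 close reported earlier in the article.
shock_close_price, current_price = 118.42, 188.52   # USD per share
shock_close_cap_t, current_cap_t = 2.90, 4.59       # USD trillions

price_rebound = pct_change(shock_close_price, current_price)
cap_rebound = pct_change(shock_close_cap_t, current_cap_t)

print(f"Share price rebound: {price_rebound:.1f}%")
print(f"Market cap rebound:  {cap_rebound:.1f}%")
```

On these inputs the share price is up about 59% and the market cap about 58%. The small gap is consistent with rounding in the market cap figures and changes in share count over the year.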

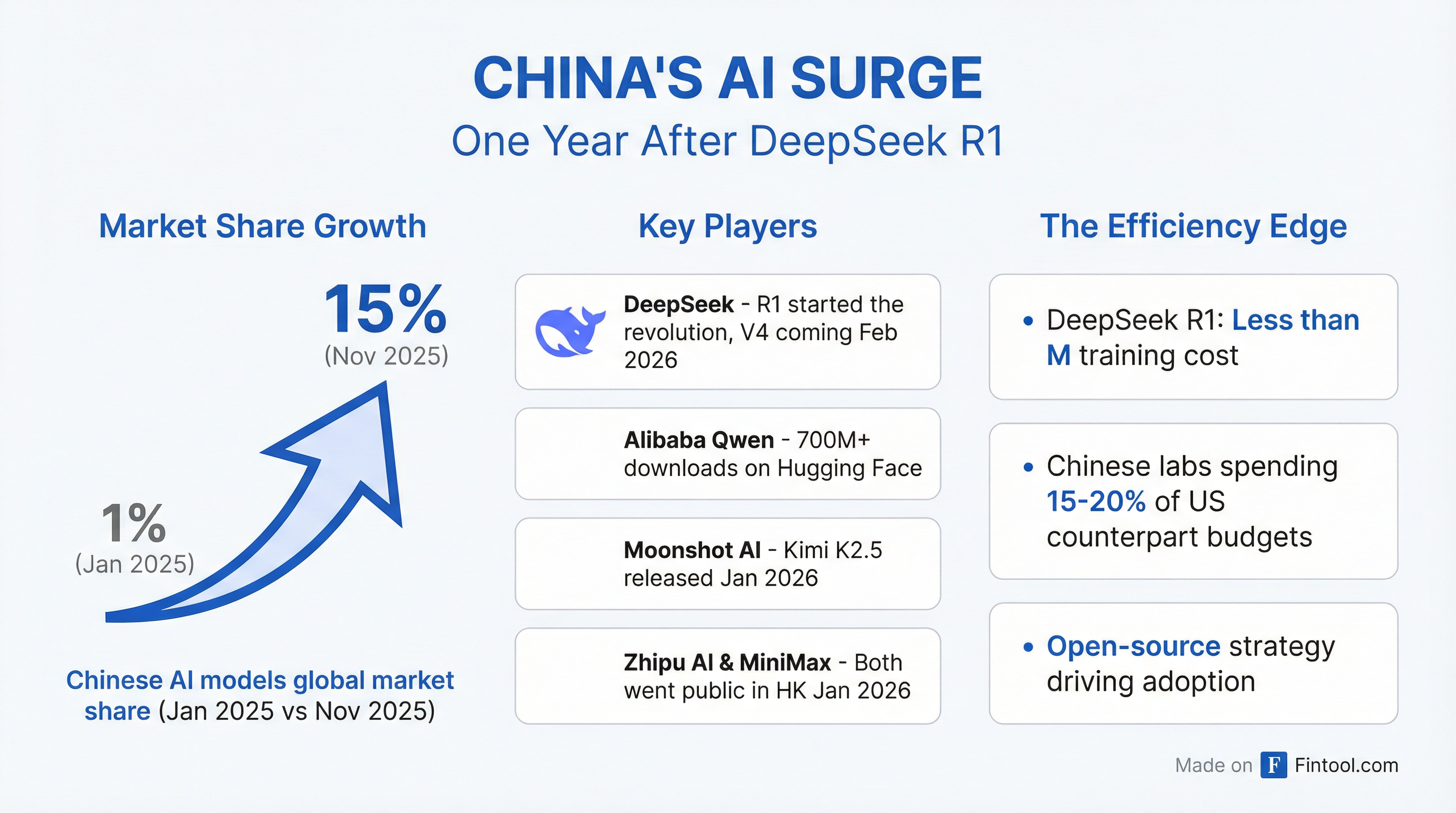

China's AI Surge: From 1% to 15% Market Share

While U.S. markets recovered, the more profound shift was happening in China. DeepSeek's open-source approach ignited an arms race among Chinese AI labs that has fundamentally altered the competitive landscape.

Chinese generative AI models now account for approximately 15% of global market share, up from roughly 1% a year ago, according to Nikkei research.

Alibaba's Qwen model family surpassed 700 million downloads on Hugging Face by January 2026, making it the world's most widely used open-source AI system. Six of the top 10 AI models developed by Japanese companies are now built on DeepSeek or Qwen foundations.

Key Chinese AI Developments Since DeepSeek R1:

| Company | Development | Significance |
|---|---|---|
| DeepSeek | V4 model expected Feb 2026 | Internal tests reportedly suggest it could outperform Claude and GPT on coding |
| Alibaba (Qwen) | 700M+ downloads | World's most-used open-source AI |
| Moonshot AI | Kimi K2.5 released Jan 27, 2026 | New open-source multimodal model with coding agent |
| Zhipu AI | Hong Kong IPO Jan 2026 | Part of "Six Tigers" Chinese AI startup wave |
| MiniMax | Hong Kong IPO Jan 2026 | State-backed funding surge |

The Efficiency Advantage

The DeepSeek shock validated a fundamentally different approach to AI development. While U.S. hyperscalers compete on raw compute power, Chinese firms have been forced by export restrictions to innovate on efficiency.

Goldman Sachs estimates that top Chinese AI firms spend just 15% to 20% of what their U.S. counterparts budget for AI development. This constraint has become an advantage: DeepSeek's latest technical papers demonstrate methods to scale AI models without proportionally increasing computational costs.

On New Year's Day 2026, DeepSeek published research introducing "Manifold-Constrained Hyper-Connections" (mHC), a training method that allows AI models to scale with "negligible computational overhead," suggesting that its January 2025 efficiency claims weren't a fluke.

What's Next: DeepSeek V4 and the February Catalyst

The AI world is watching for DeepSeek's next move. The Information reports that DeepSeek V4, featuring enhanced coding capabilities, is expected in mid-February 2026—just before Chinese New Year.

Internal tests by DeepSeek employees suggest V4 could outperform rivals including Anthropic's Claude and OpenAI's GPT series on coding tasks. A new model identifier called "MODEL1" has appeared 28 times across 114 files in DeepSeek's FlashMLA code repository, suggesting significant architectural changes from previous versions.
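A count like the one above can in principle be reproduced on a local checkout of a repository. The following is a minimal sketch, not the method used to produce the reported figures: the function name `count_identifier` is hypothetical, and it simply counts plain-text occurrences of a string across every file under a directory.

```python
from pathlib import Path


def count_identifier(root: str, identifier: str) -> tuple[int, int, int]:
    """Return (total occurrences, files containing it, files scanned)."""
    occurrences = 0
    files_with_match = 0
    files_scanned = 0
    for path in Path(root).rglob("*"):
        # Skip directories and anything inside the .git metadata folder.
        if not path.is_file() or ".git" in path.parts:
            continue
        files_scanned += 1
        try:
            text = path.read_text(encoding="utf-8", errors="ignore")
        except OSError:
            continue  # Unreadable file: counted as scanned, no matches.
        hits = text.count(identifier)
        if hits:
            occurrences += hits
            files_with_match += 1
    return occurrences, files_with_match, files_scanned
```

Calling `count_identifier("path/to/checkout", "MODEL1")` on a cloned repository returns the three counts. Results depend on the commit checked out and on which files are included, so they may not match the figures quoted here.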

Meanwhile, Moonshot AI—backed by Alibaba and HongShan—released its Kimi K2.5 multimodal model today, along with a coding agent, heating up the Chinese AI competition just as DeepSeek prepares its next move.

Investment Implications

For investors, the DeepSeek anniversary offers several lessons:

1. AI Infrastructure Spending Is Resilient: Despite efficiency breakthroughs, hyperscaler capex has only accelerated. The market now views DeepSeek's efficiency gains as complementary to, not substitutes for, massive compute buildouts.

2. China Is a Real Competitor: The "9-12 months behind" narrative is outdated. Chinese AI labs are releasing competitive models at a fraction of U.S. costs, and their open-source strategy is driving rapid global adoption.

3. Watch the Model Release Calendar: DeepSeek V4 in February could be the next catalyst. If it delivers on coding benchmark promises, expect another round of questions about AI infrastructure ROI.

4. Memory and Infrastructure Winners Are Expanding: The AI trade has broadened beyond chipmakers. Memory and storage stocks including Micron, Sandisk, and Seagate were the S&P 500's biggest winners in 2025, and momentum has continued into 2026.

The Bottom Line

One year after DeepSeek's R1 triggered the largest single-day market cap loss in history, the AI landscape has transformed. Nvidia has recovered to a $4.59 trillion market cap after topping $5 trillion in October. Chinese AI models have grown from a curiosity to roughly 15% of global market share. And the efficiency-versus-scale debate that seemed so binary in January 2025 has evolved into a more nuanced understanding: both approaches can coexist, but the competitive threat from China is real and growing.

With DeepSeek V4 expected in the coming weeks, investors should remember the core lesson from a year ago: in AI, disruption can arrive faster than anyone expects.

Related:

- Nvidia (NVDA): The AI infrastructure leader
- Alibaba (BABA): Qwen's parent company
- Broadcom (AVGO): AI chip beneficiary
- ASML (ASML): Semiconductor equipment leader
- AMD (AMD): Nvidia's primary competitor