Earnings summaries and quarterly performance for ADVANCED MICRO DEVICES.

Executive leadership at ADVANCED MICRO DEVICES.

Lisa Su

Chair, President and Chief Executive Officer

Ava Hahn

Senior Vice President, General Counsel and Corporate Secretary

Darren Grasby

Executive Vice President, Chief Sales Officer

Forrest Norrod

Executive Vice President and General Manager, Data Center Solutions Business Unit

Jack Huynh

Senior Vice President and General Manager, Computing and Graphics Business Group

Jean Hu

Executive Vice President, Chief Financial Officer and Treasurer

Mark Papermaster

Executive Vice President and Chief Technology Officer

Philip Guido

Executive Vice President, Chief Commercial Officer


Research analysts who have asked questions during ADVANCED MICRO DEVICES earnings calls.

Aaron Rakers

Wells Fargo

9 questions for AMD

Joshua Buchalter

TD Cowen

9 questions for AMD

Timothy Arcuri

UBS

9 questions for AMD

Vivek Arya

Bank of America Corporation

9 questions for AMD

Ross Seymore

Deutsche Bank

8 questions for AMD

Stacy Rasgon

Bernstein Research

7 questions for AMD

Thomas O’Malley

Barclays Capital

6 questions for AMD

Ben Reitzes

Melius Research LLC

4 questions for AMD

Joe Moore

Morgan Stanley

4 questions for AMD

Joseph Moore

Morgan Stanley

4 questions for AMD

C J Muse

Cantor Fitzgerald

3 questions for AMD

CJ Muse

Cantor Fitzgerald

3 questions for AMD

Harlan Sur

JPMorgan Chase & Co.

3 questions for AMD

Antoine Chkaiban

New Street Research

2 questions for AMD

Jim Schneider

Goldman Sachs

2 questions for AMD

Tom O'Malley

Barclays

2 questions for AMD

Toshiya Hari

Goldman Sachs Group, Inc.

2 questions for AMD

Benjamin Reitzes

Melius Research

1 question for AMD

Blayne Curtis

Jefferies Financial Group

1 question for AMD

Christopher Muse

Cantor Fitzgerald

1 question for AMD

Harsh Kumar

Piper Sandler & Co.

1 question for AMD

Recent press releases and 8-K filings for AMD.

- AMD signed 6 GW multi-generation AI infrastructure agreements with Meta and OpenAI featuring performance-based warrants to align roadmaps and accelerate chip purchases.

- The MI450 GPU family will ramp in H2 2026 on the Helios rack-scale platform, bolstered by the ZT Systems acquisition, to address heterogeneous training and inference workloads.

- Strong demand for both CPUs and GPUs underpins an ambitious 35% CAGR revenue growth target toward $20 EPS by 2029, with data-center AI revenues projected to grow at an 80% CAGR over the next 3–5 years.

- The supply chain is currently tight because demand was under-forecasted, but AMD has secured adequate CoWoS capacity and is expanding wafer supply with foundry partners; memory price volatility may pressure PCs, while data-center demand remains robust.

- AMD secured 6 GW AI infrastructure partnerships with Meta (semi-custom GPU deal) and OpenAI, using performance-based warrants to deepen multi-generational collaborations and accelerate ecosystem development.

- Unveiled a ramp plan for MI450 AI GPUs, the Helios rack-scale system (via the ZT Systems acquisition), and a chiplet-based architecture supporting flexible workload optimization and integrated UALink networking over Ethernet.

- Maintains ambitious guidance of a 35% revenue CAGR over the next 3–5 years, targeting more than $20 EPS, and anticipates capturing part of a $120 billion AI revenue opportunity within a $1 trillion end-of-decade market.

- Reports supply tightness in CPU compute driven by unexpected market growth, but affirms ample production capacity and plans to expand wafer supply through 2026–2027 to meet durable demand.

- AMD deepens its AI infrastructure partnerships through multi-generational 6 GW semi-custom GPU deals with Meta and OpenAI, including performance-based warrants that align incentives.

- Data center AI revenue from MI300/MI350 exceeded $2 billion per quarter, with AMD targeting an 80% CAGR over the next 3–5 years as MI450 enters ramp.

- AMD is launching MI450 GPUs with integrated Helios rack-scale infrastructure (via the ZT Systems acquisition), with pilots in Q3 and a sharp ramp in Q4 2026, leveraging open standards co-developed with Meta.

- AMD reports robust and durable demand across CPU and GPU product lines, citing supply tightness on CPUs due to under-forecasted compute needs, and plans to expand supply in 2026–27.

- AMD partners with Wind River to deliver the industry's first unified O-RAN and AI-RAN platform by integrating AMD EPYC processors into the Wind River Cloud, aiming to reduce infrastructure costs and complexity.

- The solution allows operators to run virtualized RAN functions and AI inference simultaneously on shared hardware, enabling real-time AI capabilities at the network edge, such as traffic prediction, anomaly detection, and energy optimization.

- Engineered for automated lifecycle management, resilience, and scalable deployment across distributed sites, the platform supports AI feature upgrades without replacing existing hardware.

- The collaboration includes joint optimization of the AI-RAN software stack and hardware, with live demonstrations at MWC Barcelona 2026.

- AMD and Nutanix enter a multi-year strategic partnership to jointly develop an open, full-stack agentic AI infrastructure platform.

- AMD will invest $150 million in Nutanix common stock at $36.26 per share and provide up to $100 million for joint R&D and go-to-market collaboration.

- The co-engineered platform will optimize AMD EPYC™ CPUs and AMD Instinct™ GPUs, integrating AMD ROCm™ software and AMD Enterprise AI into Nutanix Cloud and Kubernetes platforms.

- The first jointly developed platform is expected to launch in late 2026, targeting scalable, production-ready agentic AI across data center, hybrid, and edge environments.

- U.S. tech and semiconductor shares rebounded from a recent selloff on news of AI software partnerships, including Anthropic’s plug-ins with Salesforce, FactSet, Thomson Reuters, Intuit and Infosys.

- AMD agreed to sell up to $60 billion in AI chips to Meta over five years and grant Meta an option to buy up to 10% of AMD.

- FactSet, Thomson Reuters and Salesforce shares climbed about 5.9%, 11.5% and 4.1%, respectively, after Anthropic’s announcements.

- Federal Reserve governor Lisa Cook weighed in on AI’s potential labor-market impacts during policy discussions.

- NAPC Defense announced $38.17 million in new U.S. government task orders extending through 2032.

- Clarivate (NYSE: CLVT) reported Q4 2025 revenue of $617.0 million with net income of $3.1 million, full-year revenue of $2.46 billion, and 2026 guidance of $2.30 billion–$2.42 billion in revenue, $980 million–$1.04 billion in Adjusted EBITDA, and $365 million–$435 million in free cash flow.

- AMD and Meta Platforms unveiled a multi-year, multi-generation agreement to deploy up to 6 gigawatts of AMD Instinct GPUs, including performance-based warrants for up to 160 million AMD shares tied to shipment and stock price milestones, with shipments beginning H2 2026.

- Multi-year, multi-generation agreement to deploy 6 GW of AMD Instinct GPUs, with initial shipments beginning in H2 2026 under the Helios rack-scale architecture.

- Co-engineered custom MI450-based GPU optimized for Meta workloads, integrated with Helios and AMD’s 6th Gen EPYC Venice CPU plus the Zen 6 Verano processor.

- The deal includes performance-based warrants for up to 160 million AMD shares and is expected to generate double-digit billions of dollars in data center AI revenue per gigawatt, ramping from H2 2026.

- Partnership deepens AMD’s end-to-end AI platform alongside collaborations with OpenAI and Oracle, strengthening its silicon-to-software ecosystem.

- 6 GW multi-year agreement for AMD Instinct GPUs, with initial 1 GW shipments in H2 2026, using Helios rack-scale architecture and custom MI450 GPUs alongside 6th Gen EPYC “Venice” CPUs.

- Co-engineering of a custom MI450-based GPU accelerator optimized for Meta workloads; MI450 series and Helios are in validation and on track for production shipments in H2 2026.

- Deepening EPYC CPU partnership: Meta will be a lead customer for 6th Gen EPYC Venice at launch and for the new Zen 6 “Verano,” targeting improved performance per watt and TCO.

- A performance-based warrant for up to 160 million shares was issued to Meta, vesting with GPU deployment and stock-price milestones; the Meta deal is expected to generate double-digit billions of dollars of data center AI revenue per GW and to be accretive to non-GAAP EPS.

- AMD announced a multi-year, multi-generation agreement with Meta to deploy 6 GW of Instinct GPUs, beginning with 1 GW of custom MI450-based accelerators and 6th Gen EPYC Venice CPUs in H2 2026 using its Helios rack-scale architecture.

- AMD issued Meta a performance-based warrant for up to 160 million shares of AMD common stock, vesting in tranches tied to GPU shipments and AMD stock price thresholds; the structure aligns long-term interests and is expected to be accretive to non-GAAP EPS.

- The deployment is expected to generate double-digit billions of dollars per gigawatt in data center AI revenue starting H2 2026, contributing to AMD’s target of >80% CAGR in data center AI and >$20 EPS within 3–5 years.

- AMD is deepening its CPU collaboration by naming Meta a lead customer for its 6th Gen EPYC Venice processor at launch and co-optimizing the Zen 6 Verano design, highlighting EPYC’s role in AI model development and inference.

Fintool News

In-depth analysis and coverage of ADVANCED MICRO DEVICES.

AMD Lands $60 Billion Meta Deal, Giving Facebook Parent Option to Own 10% of Chipmaker

Meta and AMD Strike $100 Billion AI Chip Deal in Challenge to Nvidia's Dominance

AMD Borrows Nvidia's AI Playbook With $300M Crusoe Loan Guarantee

AMD Crashes 17% Despite Q4 Beat—Cathie Wood's ARK Buys $28M

AMD Crashes 17% in Worst Day Since 2018 as Q1 Guidance Spooks Investors

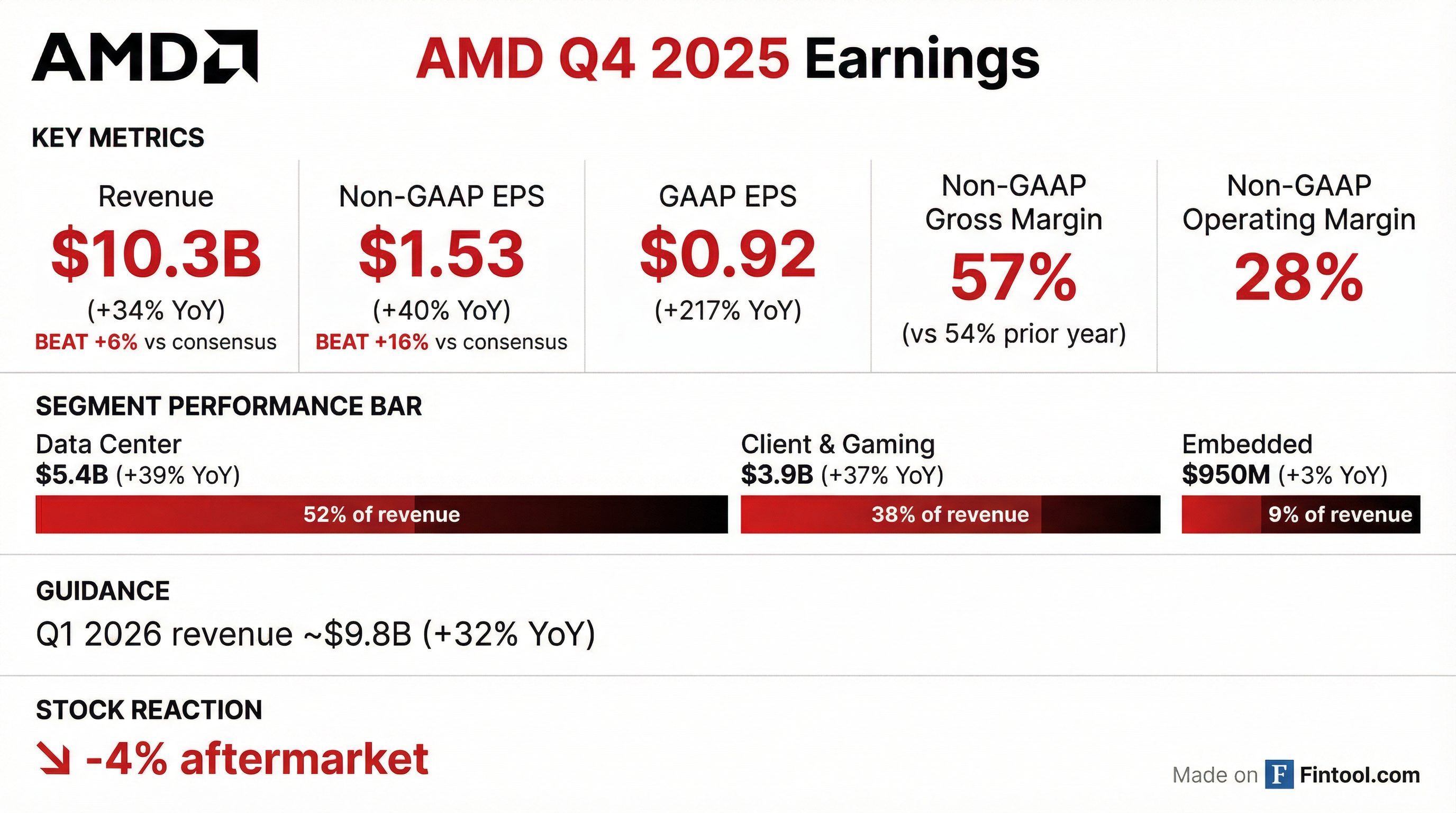

AMD Posts Record $10.3B Quarter, Stock Falls 5% as AI Expectations Outpace Results

