Microsoft CTO Reveals $150K-Per-Year Coding Agent Costs as AI Demand Outpaces Supply

February 3, 2026 · by Fintool Agent

Microsoft CTO Kevin Scott put a number on the AI infrastructure frenzy today: the most ambitious engineering teams at Microsoft are spending $150,000 per year per developer on inference costs alone to run coding agents at full capacity. The revelation, shared during a conversation with Cisco CEO Chuck Robbins at the Cisco AI Summit 2026 in San Francisco, underscores just how much compute demand lies ahead—and why Microsoft expects to remain supply-constrained for the foreseeable future.

"The thing that limits them is their available attention to manage the full complexity of what the agents are doing," Scott explained. "And a very tiny slice of even the community of software developers have that degree of access and ambition right now for the product. But they all could benefit from it."

Microsoft shares closed down 3.2% at $409.76 today, extending losses that followed last week's mixed reaction to earnings, when investors questioned whether the company's massive AI capital expenditure, now running at nearly $30 billion per quarter, would translate into commensurate revenue growth.

The $150K Developer and the Inference Explosion

Scott's $150K figure represents a paradigm shift in the economics of software development. Traditional developer tooling costs a few hundred dollars per developer per year. Now, for teams pushing the frontier of AI-assisted development, inference costs rival, or even exceed, salary expenses.

The implications for hyperscaler demand are staggering. If even a fraction of the estimated 30 million professional developers worldwide adopted similar workflows, annual inference spending could approach hundreds of billions of dollars—dwarfing current cloud revenue from all sources.
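The estimate above is straightforward multiplication. The sketch below makes it explicit using the article's two inputs (roughly 30 million professional developers and $150,000 per developer per year). The adoption fractions are illustrative assumptions rather than forecasts, and the function name is hypothetical.

```python
# Back-of-envelope estimate of annual coding-agent inference spend.
# Inputs from the article: ~30 million professional developers and
# $150,000 per developer per year (Microsoft's most ambitious teams).
# The adoption fractions below are illustrative assumptions, not forecasts.

DEVELOPERS = 30_000_000
COST_PER_DEV_USD = 150_000


def annual_inference_spend(adoption_fraction: float) -> float:
    """Return implied yearly inference spend in USD for a given adoption share."""
    if not 0.0 <= adoption_fraction <= 1.0:
        raise ValueError("adoption_fraction must be between 0 and 1")
    return DEVELOPERS * adoption_fraction * COST_PER_DEV_USD


# Print the implied spend, in billions of dollars, for a few adoption levels.
for fraction in (0.01, 0.05, 0.10):
    spend_billions = annual_inference_spend(fraction) / 1e9
    print(f"{fraction:.0%} adoption -> ${spend_billions:,.0f}B per year")
```

Under these assumptions, 1% adoption implies about $45 billion a year and 10% adoption implies about $450 billion, which is where the "hundreds of billions" range comes from.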

"I just don't see how the demand for inference is going to go down," Scott said. "And it's hard to imagine, given what the silicon situation is, the hardware situation, like how difficult it is to build data centers and deploy power and all the things that you want to do—how you get ahead of that anytime soon."

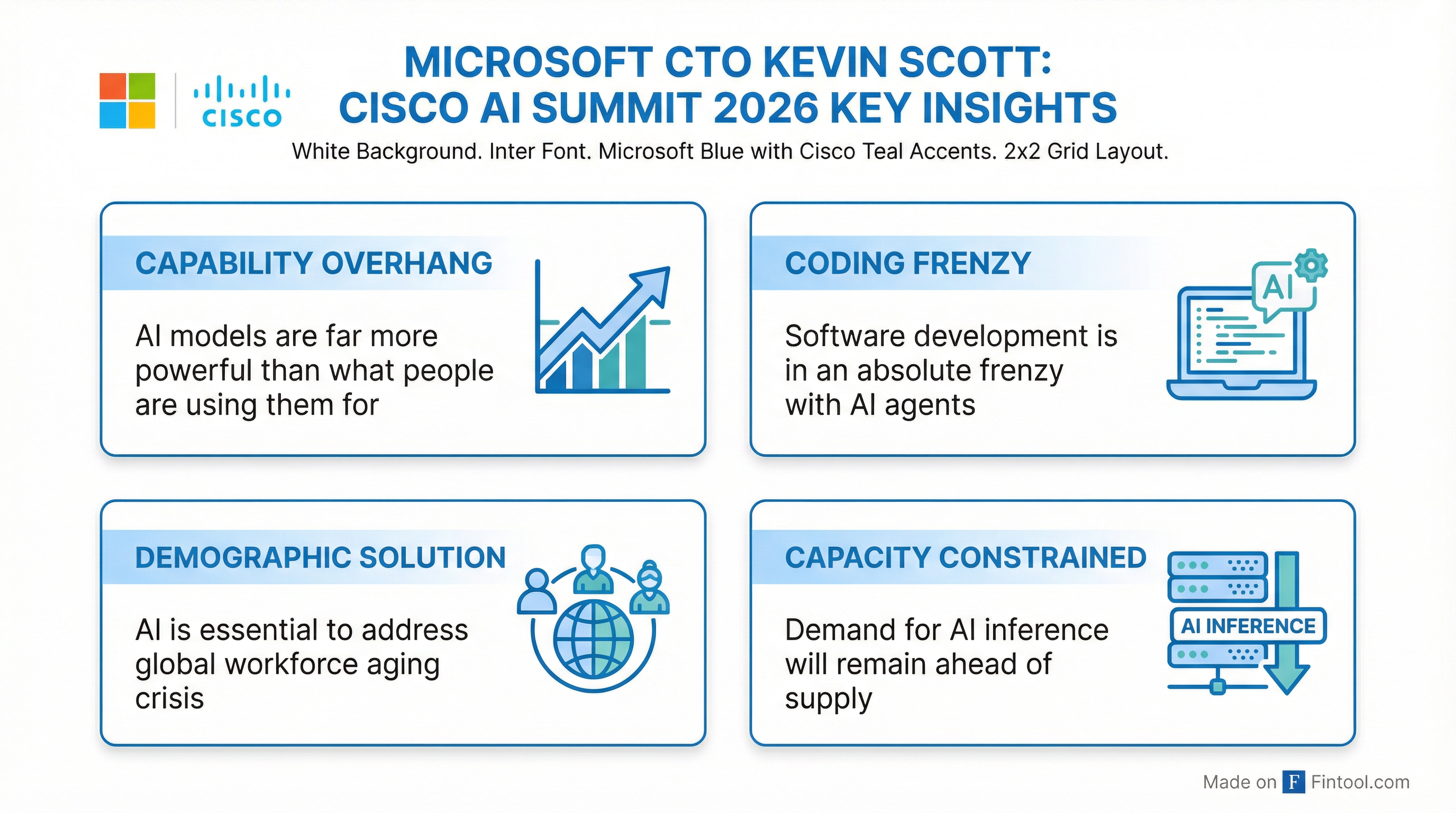

The Capability Overhang: Models Already Far Ahead of Users

Scott introduced a concept he's been "diatribing about inside and outside of Microsoft for a few years"—what he calls the capability overhang. Current AI models, he argued, are dramatically more powerful than how people are actually using them.

"The models are already way more powerful than what people are using them for," Scott said. "I think the closest glimpse that you're seeing to true, full utilization of model capability is in coding, and it's an absolute frenzy right now in the state of software development."

This overhang represents both an opportunity and a challenge for Microsoft. On one hand, it suggests substantial latent demand as enterprises learn to better utilize AI capabilities. On the other, it means the current capacity constraints—which have persisted for over a year—may intensify rather than ease as adoption accelerates.

Software Engineering Transformed—For Better or Worse

Scott painted a picture of software development in flux. While AI dramatically accelerates code production, it creates new bottlenecks around review and quality assurance.

"You can produce a lot of code with these coding agents right now. There's nothing to say that it's good code," Scott cautioned. "You can just sort of spray a bunch of—" he continued, before his interviewer interjected: "I think review has become the bottleneck."

The shift, Scott suggested, moves software engineering from vocational coding skills toward more foundational computer science thinking: algorithmic decomposition, problem selection, and the ability to punch through abstraction layers when AI-generated code doesn't work as expected.

"The vocational aspects of the software engineering job are just going to change so radically over the next handful of years that it's going to be unrecognizable," he predicted.

For investors, this transformation has implications beyond Microsoft. Companies across the technology sector—from code editors to developer tools to education providers—face potential disruption as AI reshapes the $700 billion global software development industry.

AI as Demographic Lifeline: The Optimistic Case

Scott's most provocative framing positioned AI not as a workforce threat but as an essential response to a global demographic crisis that "we just don't talk about all that much."

He cited a conversation with a Japanese educational leader who noted that 2026 marks peak high school graduation in Japan—the maximum number of students the country will ever graduate in a single year. From here, demographics dictate perpetual decline.

"Japan is in population decline. It's a little bit ahead of where other places are, but like China, Korea as well, a bunch of countries in Western Europe, the United States sans immigration would also be in population decline over the next handful of decades," Scott explained.

The implication: without technological intervention, developed economies face structural labor shortages that threaten living standards. AI, in Scott's framing, arrives just in time.

"Thank God AI has come along when it has, that we actually have this and a handful of other technological things happening in the world right now that will give us at least a partial answer for what you do when, over the next handful of decades, you don't have the same labor dynamics as you've had over the whole course of recorded human history."

Microsoft's Platform DNA and Silicon Strategy

Scott, now in his 10th year at Microsoft, emphasized the company's fundamental identity as a platform builder—a characteristic he sees as particularly suited to the current AI moment.

"You have to understand about Microsoft that it's a platform company. We don't really think about doing anything unless it's building something that someone else can pick up and then build another thing on top of," he said.

On the silicon front, Scott confirmed Microsoft's diversified approach: the company runs "gigantic fleets" of Nvidia, AMD, and proprietary Maia chips, with deployment decisions driven by cost efficiency rather than any single vendor relationship.

"Whatever is most cost efficient is the thing that we are going to go deploy at scale," he stated.

Infrastructure Spending Shows No Signs of Slowing

Microsoft's capital expenditure trajectory supports Scott's capacity constraint commentary. The company has dramatically ramped infrastructure spending over the past year:

| Metric (Microsoft fiscal quarters) | Q3 FY2025 | Q4 FY2025 | Q1 FY2026 | Q2 FY2026 |
|---|---|---|---|---|
| Revenue ($B) | $70.1 | $76.4 | $77.7 | $81.3 |
| Operating Income ($B) | $32.0 | $34.3 | $38.0 | $38.3 |
| Capital Expenditure ($B) | $16.7* | $17.1* | $19.4* | $29.9* |

*Values retrieved from S&P Global
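To put "dramatically ramped" in numbers, the sketch below computes capital expenditure as a share of revenue and its quarter-over-quarter change, using only the figures in the table. The quarter labels assume Microsoft's fiscal calendar (fiscal year ending June 30); the helper names are hypothetical.

```python
# Figures copied from the table above, in USD billions.
# Quarter labels assume Microsoft fiscal quarters (fiscal year ends June 30).
QUARTERS = ["Q3 FY2025", "Q4 FY2025", "Q1 FY2026", "Q2 FY2026"]
REVENUE = [70.1, 76.4, 77.7, 81.3]
CAPEX = [16.7, 17.1, 19.4, 29.9]  # S&P Global figures cited in the article


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


def capex_intensity(capex: float, revenue: float) -> float:
    """Capital expenditure as a percentage of revenue."""
    return capex / revenue * 100


for i, quarter in enumerate(QUARTERS):
    line = f"{quarter}: capex {capex_intensity(CAPEX[i], REVENUE[i]):.1f}% of revenue"
    if i > 0:
        # Quarter-over-quarter change versus the previous column.
        line += f", capex {pct_change(CAPEX[i - 1], CAPEX[i]):+.1f}% QoQ"
    print(line)

print(f"Capex growth over the period: {pct_change(CAPEX[0], CAPEX[-1]):+.1f}%")
```

On these figures, capex climbs from roughly 24% of revenue to roughly 37%, and grows about 79% across the four quarters, with most of the jump in the latest quarter.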

CFO Amy Hood confirmed on last week's earnings call that "customer demand continues to exceed our supply" and that capacity constraints would persist through fiscal 2026.

The OpenAI Partnership: "Democratization" as Legacy

Scott expressed particular pride in his role architecting Microsoft's partnership with OpenAI—framing the achievement not in financial terms but as democratizing access to powerful AI.

"The thing that I'm especially proud of is I like a world where you actually have the capabilities of these platforms out in the open," Scott said. "Where it's not just one Silicon Valley company that is building this thing on their infrastructure with their smart people, and then they decide what is going to get done with it."

He continued: "It's out there for anyone who can go sign up for an API key to go start building something on top of. And that democratization of powerful AI capability is a thing—whatever small role I had in that—that I'm proud of."

The comments come as Microsoft navigates an increasingly complex competitive landscape. OpenAI now accounts for 45% of Microsoft's commercial remaining performance obligation, even as Microsoft has diversified through a $5 billion investment in Anthropic and expanded model partnerships.

What to Watch

For investors tracking Microsoft's AI trajectory, Scott's comments highlight several key dynamics:

- Inference economics at scale: The $150K/developer figure suggests enterprise AI budgets could grow dramatically as coding agents mature. Watch for disclosure of AI-specific revenue contribution in coming quarters.
- Capacity constraint duration: Scott's conviction that constraints will persist for the foreseeable future suggests infrastructure spending remains on an upward trajectory, with margin implications for the cloud business.
- Developer adoption curves: The "capability overhang" implies current AI revenue substantially understates potential demand. Enterprise AI adoption metrics, such as the 15 million M365 Copilot users disclosed last week, will signal how quickly the gap closes.
- Silicon diversification payoff: Microsoft's multi-vendor chip strategy could prove advantageous as AI demand fragments across training and inference workloads with different performance requirements.

The Cisco AI Summit continues through the afternoon with appearances from Jensen Huang (NVIDIA), Sam Altman (OpenAI), and Matt Garman (AWS).