OpenAI Seeks Nvidia Chip Alternatives as Codex Speed Issues Emerge

February 2, 2026 · by Fintool Agent

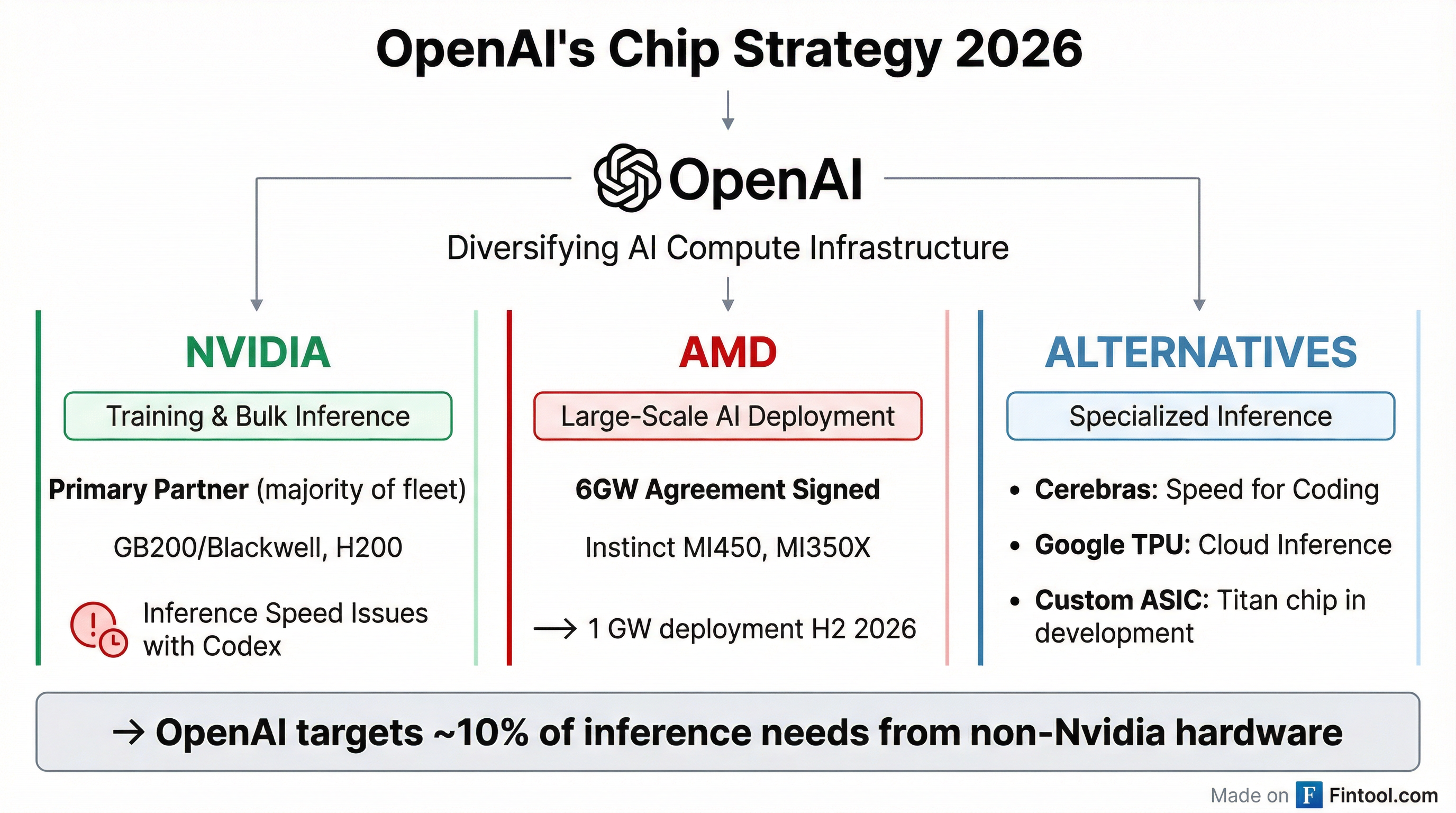

OpenAI is dissatisfied with some of Nvidia's latest AI chips and has been exploring alternatives since last year, according to Reuters, potentially disrupting the relationship between the two most consequential companies in the AI boom.

The ChatGPT maker's search for GPU alternatives focuses on companies building chips with large amounts of embedded memory (SRAM) that can deliver faster inference, the process by which AI models respond to user queries. Inside OpenAI, the issue became particularly visible in Codex, the company's AI coding product, where staff attributed slow responses partly to limitations in Nvidia's GPU architecture.

Nvidia shares fell 2.9% to close at $185.61 on the news, while rival AMD, which has a signed 6-gigawatt GPU deal with OpenAI, rose 4.5% to $246.27.

The Inference Problem

Nvidia remains dominant in chips for training large AI models, but inference has become a new competitive battleground. The distinction matters: training builds AI models by processing vast datasets, while inference runs those trained models to answer user questions in real time.

"Customers continue to choose NVIDIA for inference because we deliver the best performance and total cost of ownership at scale," Nvidia said in a statement. OpenAI separately confirmed it relies on Nvidia to power "the vast majority of its inference fleet."

But seven sources told Reuters that OpenAI is not satisfied with the speed at which Nvidia's hardware can deliver responses for specific workloads like software development and AI-to-AI communication. The company needs new hardware that would eventually provide about 10% of OpenAI's inference computing needs.

The technical issue centers on memory architecture. Inference requires fetching data from memory more frequently than training does, and Nvidia's GPUs rely on external high-bandwidth memory (HBM), which adds latency. Alternative chip designs from Cerebras and others embed large amounts of SRAM directly on the chip, reducing the time spent waiting for data.
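To make the memory argument concrete, here is a rough back-of-envelope sketch. It uses a common simplification that is not taken from the Reuters report: when a model generates one token at a time, every weight has to be read from memory once per token, so memory bandwidth caps the single-stream generation rate. The model size and both bandwidth figures are hypothetical placeholders chosen for round numbers, not specifications of any Nvidia, Cerebras, or other product.

```python
def max_decode_tokens_per_second(weight_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream tokens/s if each token needs one full pass over the weights."""
    if weight_bytes <= 0 or bandwidth_bytes_per_s <= 0:
        raise ValueError("weight_bytes and bandwidth_bytes_per_s must be positive")
    return bandwidth_bytes_per_s / weight_bytes


# Hypothetical placeholder values (assumptions, not vendor or measured figures).
WEIGHT_BYTES = 100e9        # 100 GB of model weights
OFF_CHIP_BANDWIDTH = 3e12   # 3 TB/s to external memory
ON_CHIP_BANDWIDTH = 30e12   # 30 TB/s aggregate to on-chip memory

off_chip_rate = max_decode_tokens_per_second(WEIGHT_BYTES, OFF_CHIP_BANDWIDTH)
on_chip_rate = max_decode_tokens_per_second(WEIGHT_BYTES, ON_CHIP_BANDWIDTH)
speedup = on_chip_rate / off_chip_rate

print(f"off-chip memory bound: {off_chip_rate:.0f} tokens/s")
print(f"on-chip memory bound:  {on_chip_rate:.0f} tokens/s")
print(f"ratio: {speedup:.0f}x")
```

Under these assumptions the ceiling scales linearly with memory bandwidth, which is why designs that keep weights close to the compute units are pitched at latency-sensitive workloads such as coding assistants. Real systems also depend on batching, interconnects, and on-chip capacity, none of which this sketch models.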

OpenAI's Multi-Supplier Strategy

OpenAI is building a diversified chip supply chain across several partners:

AMD: Signed a 6-gigawatt definitive agreement in October 2025, with the first 1 GW deployment of Instinct MI450 GPUs beginning in H2 2026. AMD issued OpenAI warrants for up to 160 million shares (roughly 10% stake at $0.01 per share) that vest as deployment milestones are met.

"This partnership is a major step in building the compute capacity needed to realize AI's full potential," CEO Sam Altman said at the announcement. "AMD's leadership in high-performance chips will enable us to accelerate progress."

Cerebras: In a January 30 call with reporters, Altman said OpenAI's deal with Cerebras will help meet demand for speed in coding workloads. "Customers using OpenAI's coding models will put a big premium on speed for coding work," Altman noted, adding that speed is less critical for casual ChatGPT users.

Google TPU: OpenAI recently began renting Google's tensor processing units to power ChatGPT and other products—the first time it has used non-Nvidia chips in a meaningful way for production inference.

Custom Silicon: OpenAI is developing a proprietary chip codenamed "Titan" on TSMC's N3 process, targeting deployment by end-2026, with a second-generation version planned for TSMC's advanced A16 node.
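The AMD warrant terms quoted above can be sanity-checked with simple arithmetic. The sketch below treats "roughly 10%" as exactly 10%, uses AMD's $246.27 close from this article as the reference price, and ignores the vesting milestones entirely. Those are simplifying assumptions, and the article does not say whether the stake is measured before or after dilution.

```python
# Figures quoted in the article.
WARRANT_SHARES = 160_000_000   # maximum shares under the warrants
EXERCISE_PRICE = 0.01          # dollars per share
AMD_CLOSE = 246.27             # AMD closing price cited in this article

# Assumption: "roughly 10%" is treated as exactly 10%.
STAKE_FRACTION = 0.10

# Share count that would make 160 million shares a 10% stake.
implied_share_base = WARRANT_SHARES / STAKE_FRACTION

# Cash OpenAI would pay to exercise every warrant.
exercise_cost = WARRANT_SHARES * EXERCISE_PRICE

# Value of the full block at the cited close, ignoring vesting and dilution.
market_value = WARRANT_SHARES * AMD_CLOSE

print(f"implied share base: {implied_share_base / 1e9:.2f} billion shares")
print(f"total exercise cost: ${exercise_cost / 1e6:.1f} million")
print(f"value at cited close: ${market_value / 1e9:.1f} billion")
```

The gap between the nominal exercise cost and the market value is what makes the warrants a meaningful incentive tied to deployment, although the amount OpenAI actually realizes depends on which milestones are met and on AMD's share price at that time.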

Nvidia's Response

Nvidia has moved to shore up its inference capabilities. In December 2025, the company acquired select assets and licensed core inference technology from Groq in an all-cash deal valued at roughly $20 billion—Nvidia's largest acquisition to date.

Groq's inference designs—including a single-core petaop processor that avoids HBM memory bottlenecks—target exactly the low-latency, real-time use cases that OpenAI is seeking. Bank of America maintained its buy rating on Nvidia with a $275 price target following the deal.

Meanwhile, Nvidia CEO Jensen Huang denied any tension with OpenAI over a planned investment. "It's nonsense to say I'm unhappy with OpenAI," Huang told reporters in Taipei. "We are going to make a huge investment in OpenAI. I believe in OpenAI, the work that they do is incredible."

The originally announced $100 billion Nvidia-to-OpenAI investment remains at the letter-of-intent stage. Huang said Nvidia would invest "a great deal of money, probably the largest investment we've ever made," but clarified it would be "nothing like" $100 billion.

Financial Context

Nvidia remains the undisputed leader in AI infrastructure, with quarterly revenues that dwarf competitors:

| Metric | Q4 FY2025 | Q1 FY2026 | Q2 FY2026 | Q3 FY2026 |
|---|---|---|---|---|
| Revenue ($B) | 39.3 | 44.1 | 46.7 | 57.0 |
| Net Income ($B) | 22.1 | 18.8 | 26.4 | 31.9 |
| Gross Margin | 73.0% | 60.5% | 72.4% | 73.4% |

The company's Q3 FY2026 revenue of $57 billion marked its 12th consecutive earnings beat, demonstrating continued dominance in AI training and the bulk of inference workloads.
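Two derived figures help put the table in context: sequential revenue growth and net margin. The short sketch below computes both from the table's own numbers. It treats the columns as Nvidia fiscal quarters, consistent with the Q3 FY2026 reference above, and the variable names are illustrative.

```python
quarters = ["Q4 FY2025", "Q1 FY2026", "Q2 FY2026", "Q3 FY2026"]
revenue = [39.3, 44.1, 46.7, 57.0]      # $B, from the table
net_income = [22.1, 18.8, 26.4, 31.9]   # $B, from the table

# Net margin: net income as a share of revenue in the same quarter.
net_margins = [ni / rev for ni, rev in zip(net_income, revenue)]

# Quarter-over-quarter revenue growth, starting from the second quarter listed.
qoq_growth = [(cur - prev) / prev for prev, cur in zip(revenue, revenue[1:])]

for label, margin in zip(quarters, net_margins):
    print(f"{label}: net margin {margin:.1%}")

for label, growth in zip(quarters[1:], qoq_growth):
    print(f"{label}: revenue growth vs prior quarter {growth:.1%}")
```

On these figures the lowest net margin falls in the same quarter as the lowest gross margin, and the largest sequential revenue increase is in the most recent quarter.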

What to Watch

Near-term catalysts:

- AMD's first 1 GW deployment to OpenAI scheduled for H2 2026

- OpenAI's Cerebras integration timeline for Codex

- Nvidia Q4 FY2026 earnings commentary on inference competition

Longer-term questions:

- Can OpenAI's custom Titan chip reach production scale?

- Will other hyperscalers follow OpenAI's multi-supplier approach?

- Does Nvidia's Groq acquisition address the SRAM-based inference gap?

The battle for AI inference may be just beginning. While Nvidia's training dominance appears secure, the fastest-growing segment of AI compute—running models for billions of users—is now openly contested.