Inferact Raises $150M at $800M Valuation to Commercialize vLLM

January 22, 2026 · by Fintool Agent

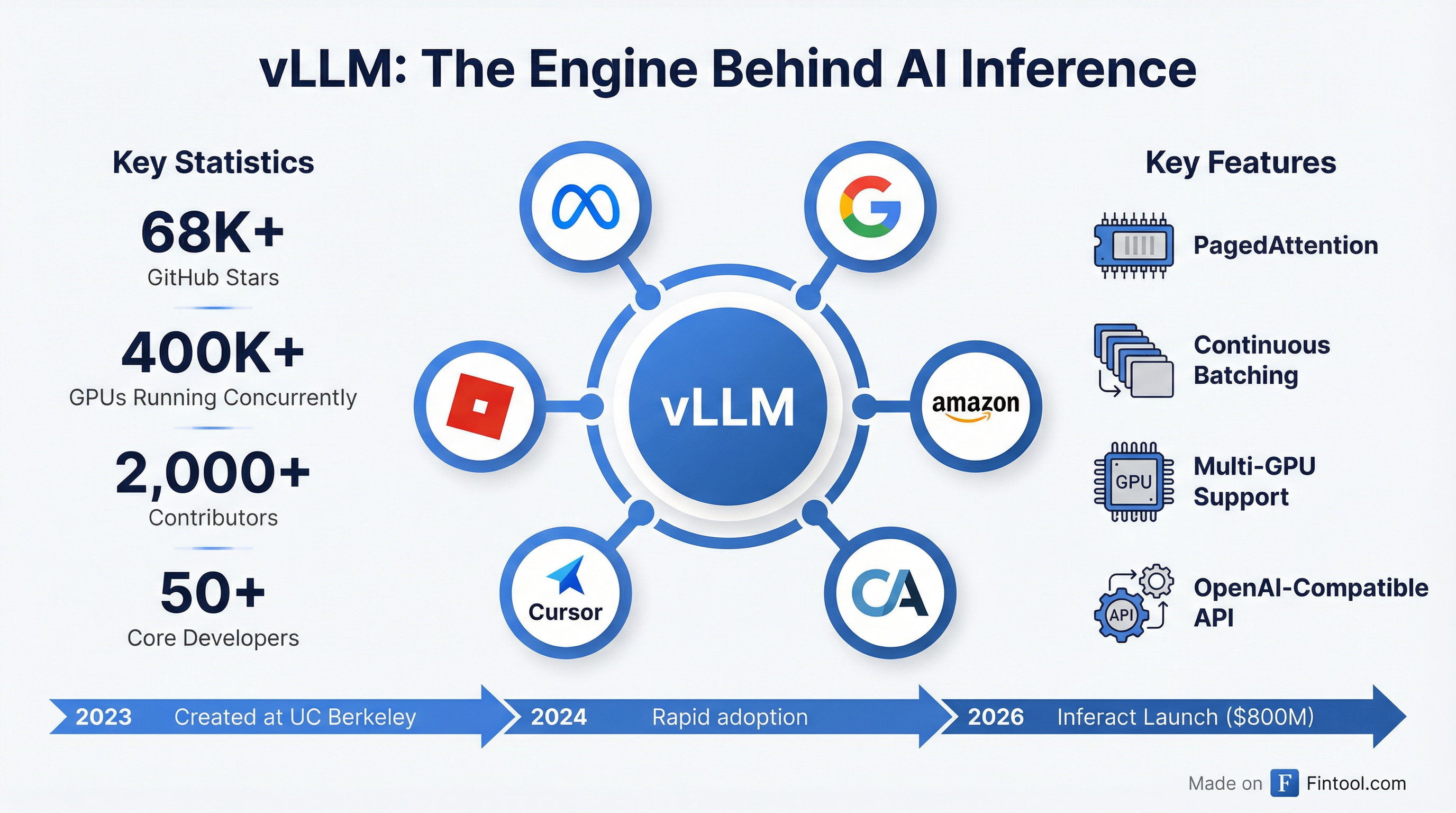

The creators of vLLM, the open-source inference engine running on over 400,000 GPUs worldwide, have raised $150 million in seed funding at an $800 million valuation to commercialize the technology through a new startup called Inferact.

The round was co-led by Andreessen Horowitz and Lightspeed Venture Partners, with participation from Sequoia Capital, Altimeter Capital, Redpoint Ventures, and ZhenFund.

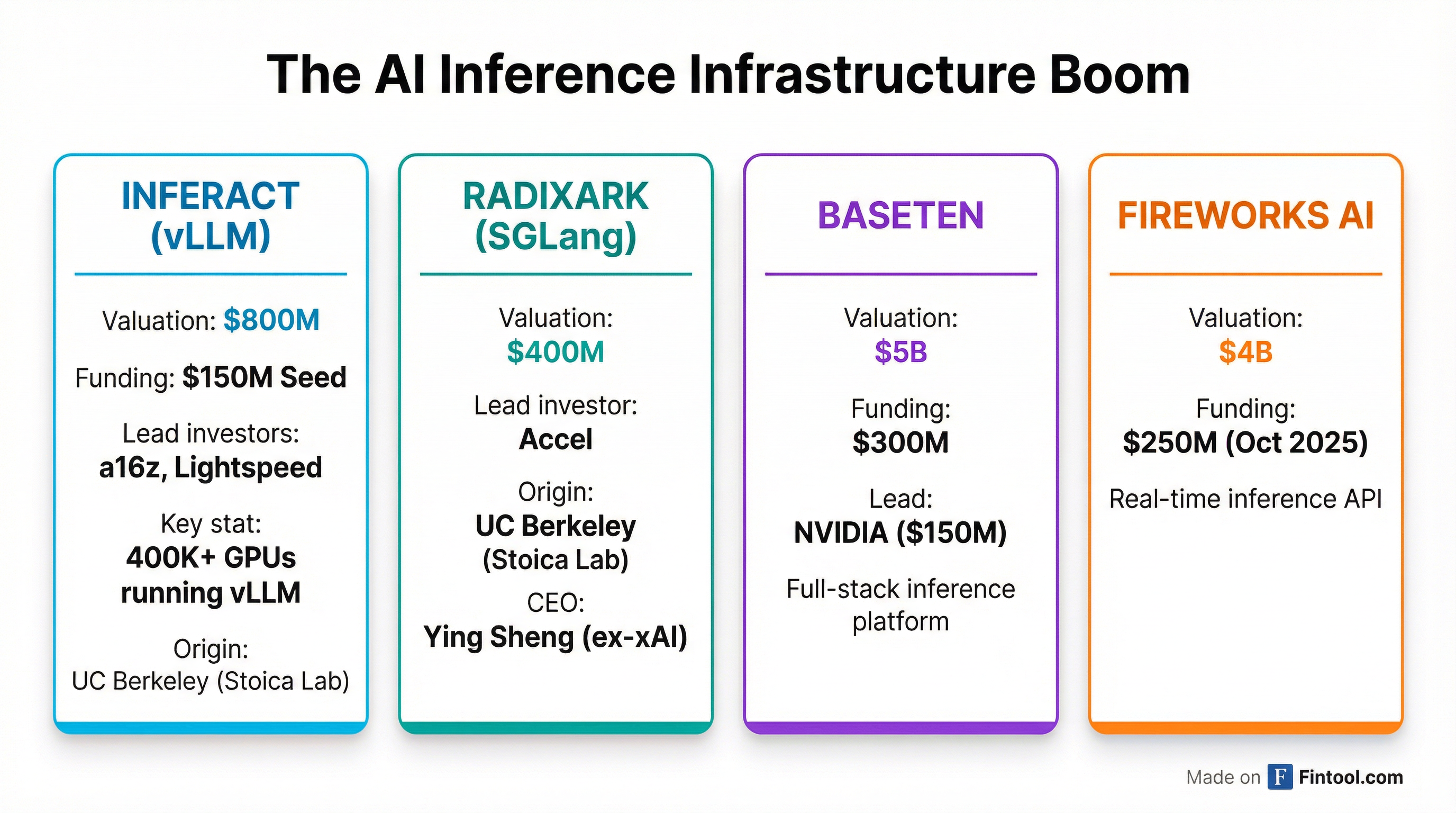

The announcement comes just one day after RadixArk, the commercial entity behind rival inference project SGLang, was reported to have secured funding at a $400 million valuation led by Accel. Both projects originated in the same UC Berkeley lab led by Databricks co-founder Ion Stoica.

The Berkeley Lab That Built AI's Infrastructure Layer

vLLM was created in 2023 at UC Berkeley's Sky Computing Lab, the same research group that produced Apache Spark and Ray—technologies that now underpin much of the modern data infrastructure stack. The project quickly became the de facto standard for serving large language models efficiently.

Today, vLLM runs at massive scale:

- 400,000+ GPUs running the software concurrently worldwide

- 68,000+ GitHub stars, making it one of the largest open-source AI projects

- 2,000+ contributors from academia and industry

- 50+ core developers maintaining the codebase

- Used in production by Meta, Google, Amazon, and Character.ai

"vLLM is the leading open source inference engine and one of the biggest open source projects of any kind," Andreessen Horowitz wrote in its investment announcement. "Many of the top open source AI labs and hardware companies even contribute to vLLM directly to ensure compatibility on day 1."

Why Inference Is the New Battleground

The funding surge reflects a fundamental shift in AI economics. While training models captures headlines, inference—actually running those models to generate outputs—increasingly dominates costs as AI applications scale.

"The focus in AI shifts from training models to deploying them in applications," TechCrunch noted in its coverage. "Technologies like vLLM and SGLang that make these AI tools run faster and more affordably are attracting investor attention."

vLLM's core innovation, PagedAttention, optimizes how AI models manage memory during inference. Combined with continuous batching and support for virtually all major hardware platforms—NVIDIA, AMD, Google TPUs, Intel Gaudi, and AWS Neuron—the technology has become essential infrastructure for anyone deploying large language models at scale.
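For readers who want a feel for the memory idea behind PagedAttention, the toy sketch below shows paged allocation in general terms: the key-value cache is split into fixed-size blocks, and each request gets a block table that grows one block at a time instead of reserving space for its maximum length up front. This is an illustration only, not vLLM's actual code. The class name `PagedKVCache`, its methods, and the block size of 16 are assumptions made for the example, and it tracks bookkeeping only, with no tensors or attention math.

```python
class PagedKVCache:
    """Toy block-table allocator (hypothetical; not vLLM's implementation)."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))     # unused physical block ids
        self.block_tables: dict[str, list[int]] = {}   # request id -> physical blocks
        self.lengths: dict[str, int] = {}              # request id -> tokens stored

    def append_token(self, seq_id: str) -> None:
        """Record one more token, claiming a new block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        # A new block is needed when there is none yet or the last one is full.
        if length == len(table) * self.block_size:
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished request's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=4, block_size=16)
for _ in range(17):
    cache.append_token("request-a")
print(len(cache.block_tables["request-a"]))  # 17 tokens occupy 2 blocks
```

Because blocks are claimed on demand and returned to a shared pool when a request finishes, short and long requests can share the same GPU memory with little wasted space, which is the property that pairs well with continuous batching.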

Inferact CEO Simon Mo, one of vLLM's original creators, told Bloomberg that existing users include Amazon's cloud service and shopping application.

A Crowded but Exploding Market

Inferact enters a market seeing unprecedented capital inflows:

| Company | Valuation | Recent Funding | Lead Investors | Focus |
|---|---|---|---|---|
| Baseten | $5.0B | $300M | NVIDIA ($150M) | Full-stack inference platform |
| Fireworks AI | $4.0B | $250M (Oct 2025) | - | Real-time inference API |
| Inferact (vLLM) | $800M | $150M seed | a16z, Lightspeed | High-throughput serving |
| RadixArk (SGLang) | $400M | Undisclosed | Accel | General inference acceleration |

The funding represents a bet that infrastructure companies—not just model builders—will capture significant value as AI scales. "Investing in the vLLM community is an explicit bet that the future will bring incredible diversity of AI apps, agents, and workloads running on a variety of hardware platforms," a16z stated.

The Berkeley Connection

The simultaneous emergence of Inferact and RadixArk highlights the outsize influence of Ion Stoica's UC Berkeley lab on AI infrastructure. Stoica, who co-founded Databricks and helped create Apache Spark, has incubated multiple foundational technologies for distributed computing and AI.

RadixArk CEO Ying Sheng left Elon Musk's xAI to lead the SGLang commercialization, with angel backing from Intel CEO Lip-Bu Tan.

Both companies plan to maintain their open-source projects while building commercial products on top:

- Inferact will continue developing vLLM while building what it calls a "universal inference layer" that works with existing providers

- RadixArk will support SGLang while commercializing optimization services

"This investment is especially close to our hearts because we've been small-scale supporters of the vLLM project since 2023," a16z noted. "The first vLLM meetup was hosted in our office, and the first a16z open source AI grant was made to the vLLM team."

What to Watch

The race to dominate AI inference infrastructure is accelerating. Key questions for investors:

- Market consolidation: Will major cloud providers (Amazon, Google, Microsoft) acquire these startups or build competing solutions?

- Hardware dependencies: How will relationships with Nvidia and other chip makers evolve as inference workloads grow?

- Open-source sustainability: Can companies like Inferact and RadixArk monetize effectively while maintaining open-source commitments?

- Enterprise adoption: Which inference platform will become the standard for enterprise AI deployments?

The $150 million seed round—likely one of the largest seed rounds in AI infrastructure—signals that investors believe the inference layer will be as valuable as the models themselves.