Meta and Nvidia Ink Multibillion-Dollar Deal for 'Millions' of AI Chips

February 17, 2026 · by Fintool Agent

Meta Platforms and Nvidia announced a sweeping expansion of their AI infrastructure partnership on Tuesday, with Meta committing to deploy "millions" of Nvidia processors—including current Blackwell GPUs, forthcoming Rubin chips, and standalone Grace CPUs—across its data centers. The multiyear, multi-generational deal, valued at "tens of billions of dollars" according to sources familiar with the arrangement, marks Nvidia's largest known customer commitment and underscores the chip giant's dominance despite intensifying competition from custom silicon.

Nvidia shares rose 1.2% to $184.97 in regular trading on the news, while Meta closed essentially flat at $639.29. Both stocks extended gains in after-hours trading.

What's in the Deal

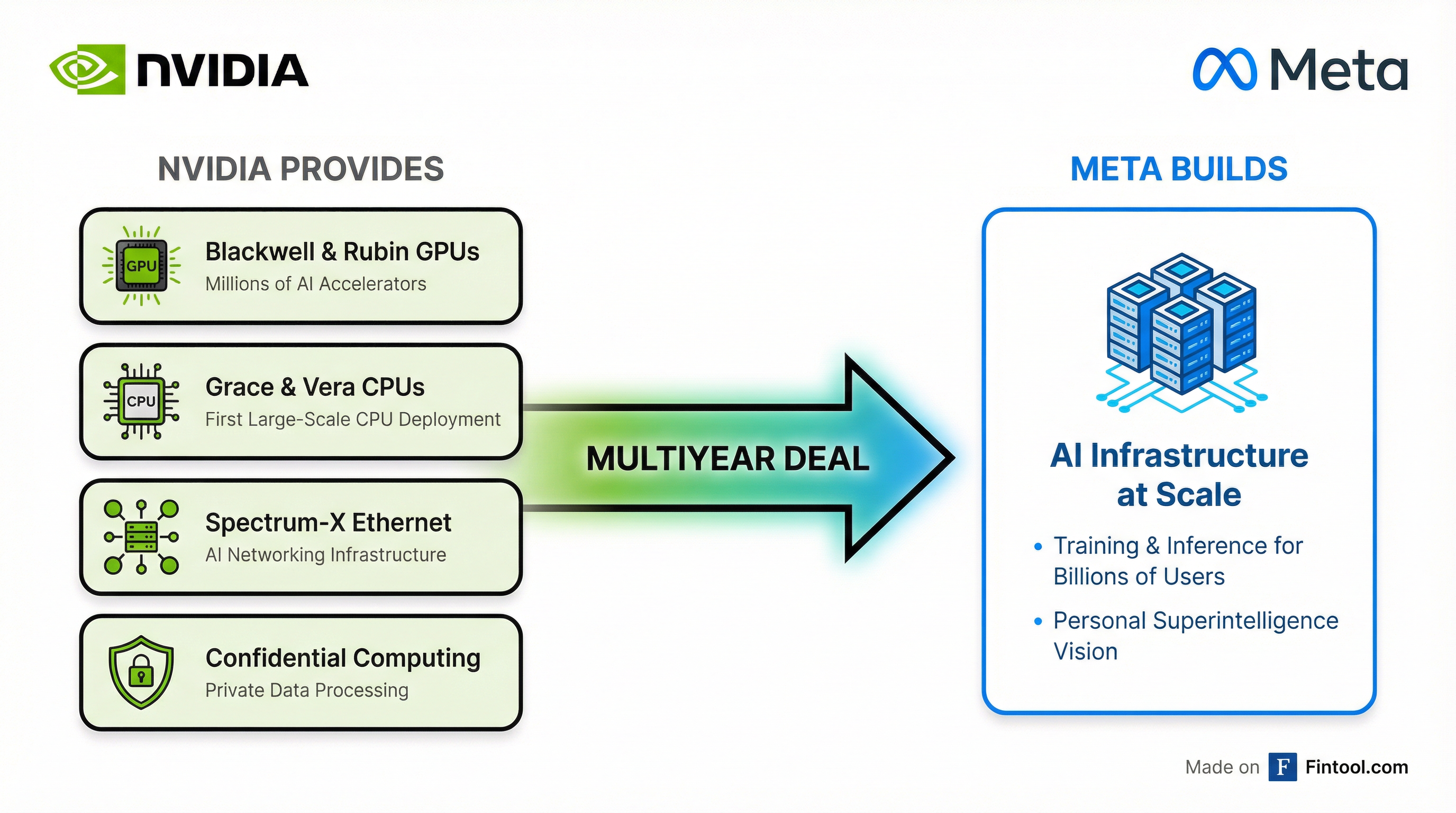

The partnership spans Nvidia's full product portfolio:

GPU Accelerators: Meta will deploy "millions" of Nvidia's Blackwell GPUs, which are currently ramping, along with Rubin-generation accelerators slated for 2027 and beyond. These chips will power both AI model training and inference across Meta's recommendation systems, generative AI features, and emerging products.

CPU Expansion: In a notable development, Meta is rolling out Nvidia's first large-scale Grace CPU-only deployment, built on Arm-based processors designed for traditional data center workloads, not just AI acceleration. Meta plans to launch Vera CPU-only systems in 2027. This directly challenges Intel and AMD, which have dominated the x86 server market for decades.

Networking: Meta is scaling AI workloads with Nvidia's Spectrum-X Ethernet platform, supporting high-throughput, low-latency connectivity between GPU clusters.

Confidential Computing: Meta has adopted Nvidia's Confidential Computing capability specifically for processing private data in WhatsApp, enabling AI features while maintaining end-to-end encryption guarantees.

"We're excited to expand our partnership with Nvidia to build leading-edge clusters using their Vera Rubin platform to deliver personal superintelligence to everyone in the world," said Mark Zuckerberg, CEO of Meta.

The Numbers: Meta's AI Spending Machine

Meta's infrastructure investment has reached staggering levels. The company guided 2026 capital expenditures of $115-135 billion, up from $69.7 billion in 2025 and nearly double that figure at the top of the range (Q4 2025 capex alone was $21.4 billion). Full-year 2026 expenses are expected at $162-169 billion, with infrastructure costs, including third-party cloud spend, depreciation, and operating expenses, as the primary growth driver.

| Metric | Q1 2025 | Q2 2025 | Q3 2025 | Q4 2025 |
|---|---|---|---|---|
| Revenue ($B) | $42.3 | $47.5 | $51.2 | $59.9 |
| Capital Expenditure ($B) | $12.9* | $16.5 | $18.8 | $21.4 |
| Operating Income ($B) | $17.6 | $20.4 | $20.5 | $24.7 |

*Values retrieved from S&P Global
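
For readers who want to check the arithmetic behind these figures, the following is a minimal Python sketch that uses only the numbers quoted above. It computes capital expenditure as a share of revenue for each quarter and the year-over-year growth implied by the 2026 guidance range. The function and variable names are illustrative and do not come from Meta's filings.

```python
# Figures copied from the article, in billions of US dollars.
revenue = {"Q1 2025": 42.3, "Q2 2025": 47.5, "Q3 2025": 51.2, "Q4 2025": 59.9}
capex = {"Q1 2025": 12.9, "Q2 2025": 16.5, "Q3 2025": 18.8, "Q4 2025": 21.4}

CAPEX_2025 = 69.7                     # full-year figure as quoted in the text
GUIDE_LOW, GUIDE_HIGH = 115.0, 135.0  # 2026 capex guidance range


def capex_intensity(capex_by_q, revenue_by_q):
    """Capex as a percentage of revenue for each quarter."""
    return {q: 100.0 * capex_by_q[q] / revenue_by_q[q] for q in capex_by_q}


def guided_growth(base, low, high):
    """Percentage growth over `base` implied by each end of a guidance range."""
    return (100.0 * (low / base - 1.0), 100.0 * (high / base - 1.0))


intensity = capex_intensity(capex, revenue)
growth_low, growth_high = guided_growth(CAPEX_2025, GUIDE_LOW, GUIDE_HIGH)

for quarter, pct in intensity.items():
    print(f"{quarter}: capex was {pct:.1f}% of revenue")
print(f"Sum of quarterly capex: ${sum(capex.values()):.1f}B")
print(f"Implied 2026 capex growth: {growth_low:.0f}% to {growth_high:.0f}%")
```

On these inputs, the guidance implies growth of roughly 65% to 94% over 2025. The four quarterly capex figures sum to $69.6 billion, slightly below the $69.7 billion full-year figure, a gap that is consistent with rounding in the quarterly values.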

Meta CFO Susan Li emphasized the company's strategy of maintaining flexibility: "We're working to meet our silicon needs by deploying a variety of chips that optimally support each of our different workloads." In Q4 2025, Meta extended its Andromeda ads retrieval engine to run on Nvidia, AMD, and its proprietary MTIA chips, "nearly tripling Andromeda's compute efficiency."

For Nvidia, Meta represents one of its largest customers. Nvidia's data center business generated $57 billion in Q3 FY26 alone, growing 64% year-over-year with gross margins of 73.4%.

Why It Matters: The Google TPU Question

The deal arrives just three months after reports that Meta was in advanced talks to spend billions on Google's tensor processing units (TPUs) starting in 2027—a potential shift that briefly sent Nvidia shares tumbling 3%.

Google has been aggressively positioning its TPUs as a credible Nvidia alternative. Anthropic committed to accessing up to one million Google TPUs, and Google Cloud executives suggested the strategy could capture "as much as 10% of Nvidia's annual revenue."

Today's announcement doesn't preclude Meta from eventually adopting Google chips, and Meta has emphasized "long-term flexibility" in its infrastructure strategy. But it signals that Nvidia remains the foundation of Meta's AI buildout for the foreseeable future.

"No one deploys AI at Meta's scale—integrating frontier research with industrial-scale infrastructure to power the world's largest personalization and recommendation systems for billions of users," said Jensen Huang, Nvidia CEO. "Through deep codesign across CPUs, GPUs, networking, and software, we are bringing the full Nvidia platform to Meta's researchers and engineers."

Both stocks have struggled in 2026 amid broader concerns about AI spending sustainability and whether GPU-intensive approaches will dominate long-term. Nvidia is down roughly 2% year-to-date after retreating from highs above $200.

Competitive Implications

For Intel and AMD: Nvidia's first large-scale Grace CPU deployment at Meta directly threatens Intel's and AMD's server CPU dominance. While the x86 duopoly still controls the vast majority of data center compute, Nvidia's Arm-based chips offer compelling performance-per-watt for specific workloads. Intel shares closed at $101.32, while AMD traded at $193.89.

For Custom Chip Developers: Amazon's Trainium, Google's TPUs, and Microsoft's Maia have all gained traction as hyperscalers seek to reduce Nvidia dependence. But Meta's expanded commitment suggests the "Nvidia tax" remains worth paying for companies requiring maximum flexibility and frontier performance. Nvidia has emphasized that its GPUs are "the only platform that runs every AI model and does it everywhere computing is done."

For Cloud Providers: Meta specifically mentioned it will deploy chips both in its own data centers and through "Nvidia Cloud Partners" like CoreWeave and Crusoe. This hybrid approach reflects the growing importance of cloud GPU access for AI workloads.

What to Watch

Rubin Ramp (2027+): The deal's inclusion of next-generation Rubin chips provides visibility into Nvidia's product roadmap. Rubin rack-scale systems, including Vera CPUs, represent Nvidia's answer to growing custom ASIC competition.

Meta's MTIA Progress: Meta is expanding its proprietary chip program to support core ranking and recommendation training workloads in Q1 2026. The balance between internal chips and Nvidia procurement will shape Meta's infrastructure cost structure.

Google TPU Discussions: Meta hasn't closed the door on Google chips. Any announcement of TPU adoption would signal cracks in Nvidia's hyperscaler moat.

WhatsApp AI Features: Nvidia's Confidential Computing deployment for WhatsApp could enable new AI capabilities while preserving privacy—a potential growth vector Meta has been cultivating.

Related Companies: Meta Platforms · Nvidia · Intel · AMD · Alphabet · CoreWeave