NVIDIA Bets $20 Billion on Inference: Inside the Groq Acquisition

December 24, 2025 · by Fintool Agent

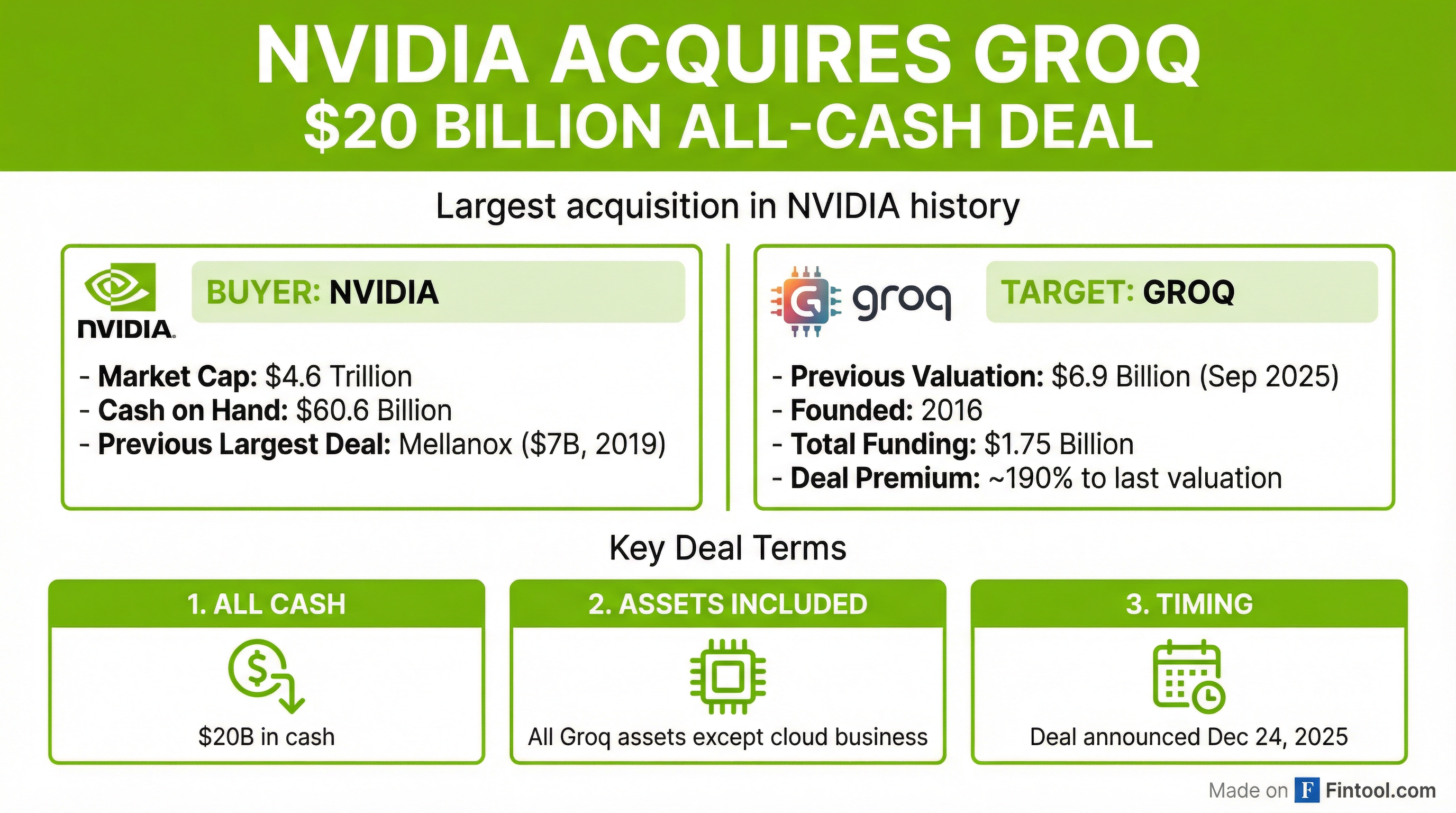

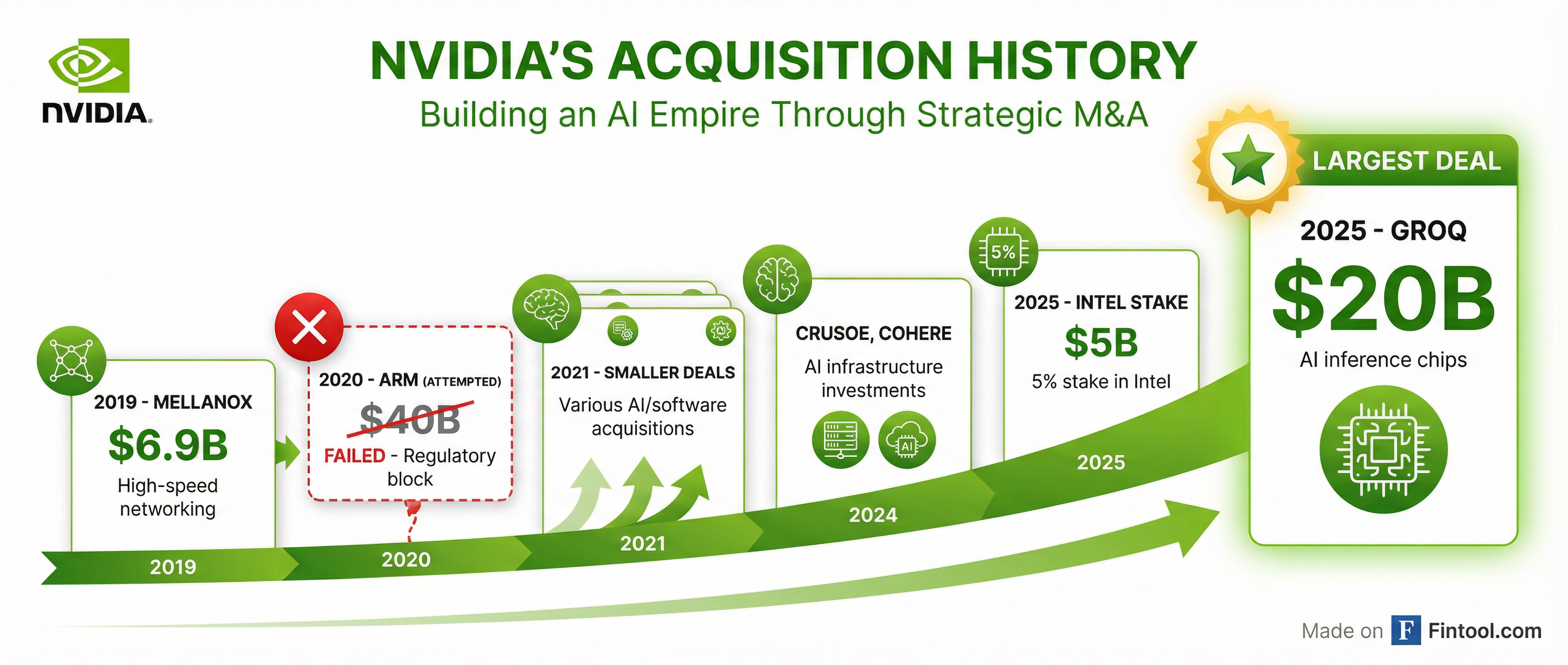

NVIDIA is paying $20 billion in cash for Groq, the AI inference chip startup founded by former Google engineers. The deal is the chipmaker's largest acquisition ever and a dramatic bet that the next phase of AI will be defined not by training models, but by running them.

The deal values Groq at nearly triple its $6.9 billion valuation from just three months ago, when the company raised $750 million in a round led by Disruptive. That implies a ~190% premium—a staggering multiple that reflects both the strategic importance of inference computing and NVIDIA's determination to own every layer of the AI stack.
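The premium follows directly from the two valuations quoted above. A minimal Python sketch of that arithmetic, using only the article's figures (the function name `premium_pct` is just an illustrative label):

```python
def premium_pct(offer: float, prior_valuation: float) -> float:
    """Percentage premium of an offer price over a prior valuation."""
    return (offer / prior_valuation - 1.0) * 100.0

offer_b = 20.0            # acquisition price, $B (from the article)
prior_valuation_b = 6.9   # September valuation, $B (from the article)

multiple = offer_b / prior_valuation_b
premium = premium_pct(offer_b, prior_valuation_b)

print(f"{multiple:.2f}x the prior valuation, or a {premium:.0f}% premium")
```

"Nearly triple" and "~190% premium" are two descriptions of the same ratio: a 2.9x multiple is a 190% increase over the starting value.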

Why Groq, Why Now?

Groq's value proposition is singular: speed. The company's proprietary Language Processing Unit (LPU) architecture delivers inference throughput of 241-300 tokens per second, up to 18x faster than competing cloud providers running on GPUs. For context, assuming the common rule of thumb of roughly 0.75 English words per token, 300 tokens per second works out to about 225 words per second, so a 500-word answer arrives in a little over two seconds; a provider running 18x slower would need closer to 40 seconds.

This performance advantage stems from a fundamentally different architecture. While NVIDIA's GPUs excel at parallel processing for training, Groq's LPU was purpose-built for the sequential, memory-intensive nature of inference workloads. The chip eliminates the external memory bottlenecks that constrain GPU inference by embedding massive SRAM directly alongside compute units.

"Inference is defining this era of AI," Groq CEO Jonathan Ross said when announcing the September funding round. He wasn't wrong—but the irony is that his company's success in proving that thesis may have made acquisition inevitable.

The Strategic Calculus

NVIDIA's timing is telling. The company has spent the past two years watching inference demand explode while its Blackwell architecture races to keep pace. CEO Jensen Huang has repeatedly emphasized the shift: reasoning AI models like OpenAI's o3 and DeepSeek-R1 consume 100x more compute per task than traditional one-shot inference.

| Metric | Q4 FY25 | Q1 FY26 | Q2 FY26 | Q3 FY26 |
|---|---|---|---|---|
| Revenue ($B) | $39.3 | $44.1 | $46.7 | $57.0 |
| Net Income ($B) | $22.1 | $18.8 | $26.4 | $31.9 |
| Gross Margin (%) | 73.0% | 60.5% | 72.4% | 73.4% |

NVIDIA can certainly afford the deal. The company ended Q3 FY26 with $60.6 billion in cash and short-term investments, up from $13.3 billion in early 2023. At $20 billion, Groq represents roughly one-third of that war chest—a significant but manageable deployment of capital for a company generating over $30 billion in quarterly net income.
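The affordability claim can be checked with two ratios. The sketch below uses only figures quoted in the article; it is a rough sizing exercise that ignores taxes, buybacks, and other uses of cash, and the variable names are illustrative:

```python
cash_and_investments_b = 60.6   # cash + short-term investments, Q3 FY26, $B (from the article)
deal_price_b = 20.0             # acquisition price, $B (from the article)
q3_net_income_b = 31.9          # Q3 FY26 net income, $B (from the table above)

# Fraction of the cash pile consumed by the deal
share_of_cash = deal_price_b / cash_and_investments_b

# How many quarters of net income (at the Q3 run rate) the price represents
quarters_of_income = deal_price_b / q3_net_income_b

print(f"Deal is {share_of_cash:.0%} of cash and short-term investments")
print(f"Deal equals {quarters_of_income:.2f} quarters of Q3-level net income")
```

On these numbers the purchase price is about a third of the cash balance and less than one quarter's net income at the most recent run rate.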

What NVIDIA Gets

Differentiated Architecture: Groq's LPU represents a genuinely novel approach to inference computing. Rather than adapting training-optimized GPUs for inference, Groq built from scratch for deterministic, low-latency token generation. This gives NVIDIA optionality—the ability to offer customers purpose-built inference silicon alongside its general-purpose GPUs.

Developer Ecosystem: Groq powers more than 2 million developers through GroqCloud, its API service. That's a meaningful installed base and a potential funnel for NVIDIA's broader enterprise AI offerings.

Talent: Groq's founding team includes Jonathan Ross, one of the architects of Google's Tensor Processing Unit (TPU). Acquiring that expertise—and preventing it from fueling a competitor—has strategic value beyond the chip designs themselves.

Revenue Trajectory: Groq is targeting $500 million in revenue this year, driven partly by a $1.5 billion commitment from Saudi Arabia announced in February. At a 40x revenue multiple, the $20 billion price tag is aggressive but not outlandish for a hypergrowth AI infrastructure company.
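The 40x figure is simply price divided by targeted revenue, which makes it sensitive to whether Groq hits that target. In the sketch below the deal price and revenue target come from the article; the miss and beat scenarios are hypothetical values chosen purely for illustration:

```python
def revenue_multiple(price_b: float, revenue_b: float) -> float:
    """Price-to-revenue multiple, both inputs in $B."""
    return price_b / revenue_b

deal_price_b = 20.0       # acquisition price, $B (from the article)
target_revenue_b = 0.5    # Groq's revenue target for the year, $B (from the article)

headline_multiple = revenue_multiple(deal_price_b, target_revenue_b)

# Hypothetical outcomes relative to the target (NOT from the article)
scenarios = {
    "miss (60% of target)": 0.6,
    "on target": 1.0,
    "beat (150% of target)": 1.5,
}
multiples = {
    label: revenue_multiple(deal_price_b, target_revenue_b * factor)
    for label, factor in scenarios.items()
}

for label, m in multiples.items():
    print(f"{label}: {m:.1f}x revenue")
```

Because the multiple rests on a target rather than reported revenue, a shortfall would make the effective multiple considerably higher than the headline number.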

What NVIDIA Doesn't Get

Notably, Groq's nascent cloud business is excluded from the transaction. This carve-out suggests potential regulatory considerations—NVIDIA acquiring both the chips and the cloud service that runs them could trigger antitrust concerns given the company's already-dominant market position.

The exclusion also preserves optionality for Groq's existing cloud customers who may not want their inference workloads running on infrastructure owned by their chip supplier's new parent company.

Competitive Implications

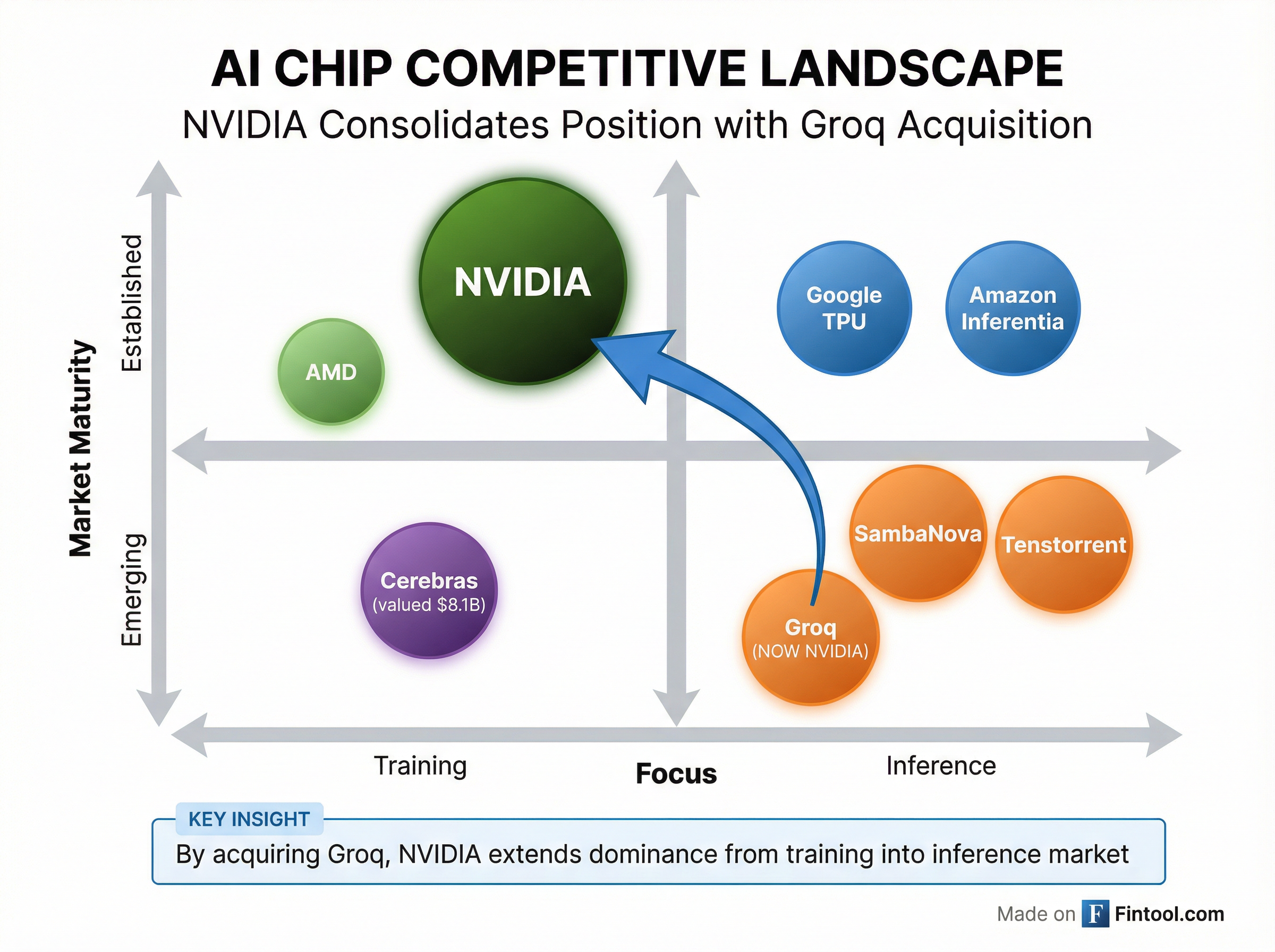

The deal reshapes the AI chip competitive landscape, particularly for inference-focused startups.

Cerebras Systems is the most obvious comparison. The wafer-scale chip maker raised $1.1 billion in September at an $8.1 billion valuation and is preparing to file for an IPO targeting Q2 2026. Cerebras claims even faster inference than Groq—over 2,500 tokens per second on Llama models—but the NVIDIA acquisition validates the market opportunity while removing a key independent competitor.

AMD and Intel face an increasingly steep climb. AMD has been gaining share in AI training workloads, but inference represents a different architectural challenge. Intel, meanwhile, just received a $5 billion investment from NVIDIA itself as part of a partnership announced in September.

Custom Silicon from Hyperscalers: Google's TPU, Amazon's Inferentia and Trainium, and Microsoft's Maia chips represent the other competitive front. These in-house solutions give cloud providers optionality away from NVIDIA—but the Groq acquisition signals NVIDIA's intent to compete aggressively even in purpose-built inference hardware.

The Regulatory Question

NVIDIA already commands an estimated 70-95% of the AI chip market. The Department of Justice has previously probed the company's business practices, examining both its Run:ai acquisition and allegations of anti-competitive pricing strategies.

The Groq deal will almost certainly face regulatory scrutiny. The combination eliminates one of the few credible independent alternatives for customers seeking non-NVIDIA inference solutions. However, the all-cash structure and relatively straightforward asset acquisition (versus the complex IP and architectural dependencies of the failed ARM deal) may smooth the approval process.

NVIDIA's recent $5 billion Intel investment—which received FTC clearance just days ago—suggests the regulatory environment has thawed somewhat for NVIDIA deals that don't raise obvious competitive concerns.

What the Investors Made

The deal represents a spectacular outcome for Groq's backers, particularly Disruptive, the Dallas-based growth firm that has invested over $500 million in the company since its 2016 founding.

| Funding Round | Date | Amount | Post-Money Valuation |
|---|---|---|---|
| Series A | Dec 2016 | $10.3M | — |
| Series B | Sep 2018 | $52.3M | — |
| Series C | Apr 2021 | $300M | — |
| Series D | Aug 2024 | $640M | $2.8B |
| Series E | Sep 2025 | $750M | $6.9B |
| Acquisition | Dec 2025 | — | $20B |

Source: Tracxn, company announcements

Other notable investors include BlackRock, Neuberger Berman, Samsung, Cisco, Altimeter, and 1789 Capital (where Donald Trump Jr. is a partner). For investors in the September round, the deal delivers nearly a 3x return in three months, an exceptional outcome even by venture capital standards.
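The step-ups between rounds can be read straight off the funding table. The sketch below computes them and, as a rough illustration, annualizes the three-month Series E gain. It assumes an investor's return tracks the headline valuation exactly, which ignores dilution, liquidation preferences, and deal terms; the helper names are illustrative:

```python
# Valuations in $B, taken from the funding table above
rounds = [
    ("Series D", 2.8),
    ("Series E", 6.9),
    ("Acquisition", 20.0),
]

def step_ups(valuations):
    """Return (label, multiple) for each consecutive pair of valuations."""
    return [
        (f"{prev_name} -> {name}", value / prev_value)
        for (prev_name, prev_value), (name, value) in zip(valuations, valuations[1:])
    ]

def annualized_multiple(multiple: float, months: float) -> float:
    """Compound a multiple earned over `months` to a 12-month equivalent."""
    return multiple ** (12.0 / months)

ups = step_ups(rounds)
for label, m in ups:
    print(f"{label}: {m:.2f}x")

series_e_multiple = ups[-1][1]
annualized = annualized_multiple(series_e_multiple, months=3)
print(f"Series E gain annualized: {annualized:.1f}x")
```

The annualized figure is a mechanical extrapolation, not a realistic expectation. It is included only to show how unusual a roughly 2.9x gain in a single quarter is.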

The Bigger Picture: Inference as Infrastructure

NVIDIA's willingness to pay a nearly 200% premium for Groq reflects a broader thesis: inference is becoming the dominant AI workload, and the companies that control inference infrastructure will capture the lion's share of AI economics.

Jensen Huang laid out this vision explicitly in NVIDIA's most recent earnings call:

"Inference is exploding. Reasoning AI agents require orders of magnitude more compute... The longer it thinks, the better the answer, the smarter the answer is."

The math supports this view. Training a frontier model is a one-time (or occasional) expense. Running that model in production—serving billions of queries, powering agentic workflows, enabling real-time reasoning—is continuous. As AI models become more capable and more widely deployed, inference compute demand will compound while training demand grows linearly.
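One way to picture the one-time versus continuous distinction is a toy break-even model: a fixed training cost set against daily inference spend that grows as usage grows. Every number in the usage lines below is a hypothetical placeholder, not a figure from NVIDIA, Groq, or the article, and the function name is illustrative:

```python
from typing import Optional

def days_until_inference_exceeds_training(
    training_cost: float,
    daily_inference_cost: float,
    daily_growth: float,
    max_days: int = 3650,
) -> Optional[int]:
    """Return the first day on which cumulative inference spend exceeds the
    one-time training cost, or None if that never happens within max_days."""
    cumulative = 0.0
    cost_today = daily_inference_cost
    for day in range(1, max_days + 1):
        cumulative += cost_today
        if cumulative > training_cost:
            return day
        cost_today *= 1.0 + daily_growth  # usage compounds day over day
    return None

# Hypothetical inputs, in arbitrary cost units (illustration only)
flat = days_until_inference_exceeds_training(100.0, 1.0, daily_growth=0.0)
growing = days_until_inference_exceeds_training(100.0, 1.0, daily_growth=0.01)

print(f"Flat usage: inference overtakes training on day {flat}")
print(f"Growing usage: inference overtakes training on day {growing}")
```

Under these assumptions, even modest compounding in usage pulls the crossover point forward substantially, which is the intuition behind treating inference as the larger long-run cost.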

Groq's LPU architecture is optimized precisely for this future. By acquiring it, NVIDIA positions itself to capture value across the entire AI compute lifecycle: training on GPUs, inference on LPUs (or Blackwell, or both), all connected via NVIDIA's networking infrastructure and managed through its software stack.

What to Watch

Regulatory Timeline: The deal will require antitrust clearance from U.S. and potentially international regulators. Watch for FTC and DOJ commentary on competitive concerns.

Integration Strategy: How NVIDIA positions Groq's LPU relative to Blackwell will signal whether this is a technology acquisition or a competitive elimination. If LPU becomes a distinct product line for inference-optimized workloads, the acquisition adds genuine customer value. If it's quietly deprioritized, the deal looks more defensive.

Cerebras IPO: The Groq acquisition establishes a public valuation benchmark for inference-focused chip companies. Cerebras's expected Q2 2026 IPO will test whether public markets validate the $20 billion Groq price tag—or view it as NVIDIA paying a strategic premium.

Cloud Partner Reactions: How will AWS, Azure, and Google Cloud respond? If they accelerate investment in custom silicon as a hedge against NVIDIA's expanding dominance, the deal could paradoxically strengthen the custom chip ecosystem it was meant to undermine.

Related Companies

- NVIDIA: Acquirer
- AMD: Competitor
- Intel: NVIDIA partner & competitor
- Google: TPU competitor
- Amazon: Inferentia/Trainium competitor
- BlackRock: Groq investor
- Cisco: Groq investor