Musk's xAI Buys Third Building, Eyes 2 Gigawatts and 1 Million GPUs in Compute Arms Race

December 31, 2025 · by Fintool Agent

Elon Musk's xAI has acquired a third building to expand its Colossus supercomputer complex, pushing toward nearly 2 gigawatts of computing power in what's becoming the most aggressive AI infrastructure buildout in history. The announcement, made by Musk on X on December 30, signals xAI's determination to close the gap with—and potentially leapfrog—OpenAI, Google, and other AI leaders through sheer computational brute force.

"xAI has bought a third building called MACROHARDRR," Musk wrote, in what appears to be a playful jab at Microsoft ($MSFT), OpenAI's largest backer.

The Memphis AI Megacomplex

The new building is located in Southaven, Mississippi, adjacent to the Colossus 2 facility currently under construction. According to The Information, it sits near a natural gas power plant that xAI is also building in the area—a critical piece of the puzzle given that AI training at this scale consumes power equivalent to a small city.

xAI's infrastructure now spans three interconnected sites:

| Site | Location | Status | GPU Target | Power Capacity |
|---|---|---|---|---|
| Colossus 1 | South Memphis, TN | Operational | 230,000 GPUs | 250 MW |
| Colossus 2 | Whitehaven/Tulane Road, TN | Under construction | 555,000+ GPUs | 1 GW |
| MACROHARDRR | Southaven, MS | Just acquired | TBD | 500+ MW |
| Total | | | 1,000,000+ GPUs | ~2 GW |

The scale is staggering. Two gigawatts of power draw is roughly equivalent to 40% of Memphis's average daily energy usage, or enough to power 1.5 million homes.
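As a rough cross-check of the site table, the listed capacities can be added up directly. The sketch below is illustrative only: the figures are the ones quoted in the table, the "+" entries are treated as lower bounds, and the names `SITES`, `GPU_GOAL`, and the helper functions are made up for this example.

```python
# Figures copied from the site table; None marks a value the table lists as TBD.
# "555,000+" and "500+ MW" are treated as lower bounds (an assumption).
SITES = {
    "Colossus 1": {"gpus": 230_000, "power_mw": 250},
    "Colossus 2": {"gpus": 555_000, "power_mw": 1_000},
    "MACROHARDRR": {"gpus": None, "power_mw": 500},
}
GPU_GOAL = 1_000_000  # the "1 million GPUs" target from the headline


def total_power_gw(sites):
    """Sum the listed power capacities and convert MW to GW."""
    return sum(site["power_mw"] for site in sites.values()) / 1_000


def known_gpus(sites):
    """Sum GPU targets for the sites that have a published number."""
    return sum(site["gpus"] for site in sites.values() if site["gpus"] is not None)


def gpu_shortfall(sites, goal=GPU_GOAL):
    """GPUs still unaccounted for if the goal is to be met."""
    return max(goal - known_gpus(sites), 0)


if __name__ == "__main__":
    print(f"Listed power: {total_power_gw(SITES):.2f} GW")           # 1.75 GW
    print(f"Listed GPU targets: {known_gpus(SITES):,}")               # 785,000
    print(f"Needed elsewhere for 1M: {gpu_shortfall(SITES):,}")       # 215,000
```

On these numbers the three sites list at least 1.75 GW, which is consistent with "nearly 2 gigawatts", and the two sites with published GPU targets leave at least 215,000 GPUs to be placed in the new building or in future expansion to reach one million.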

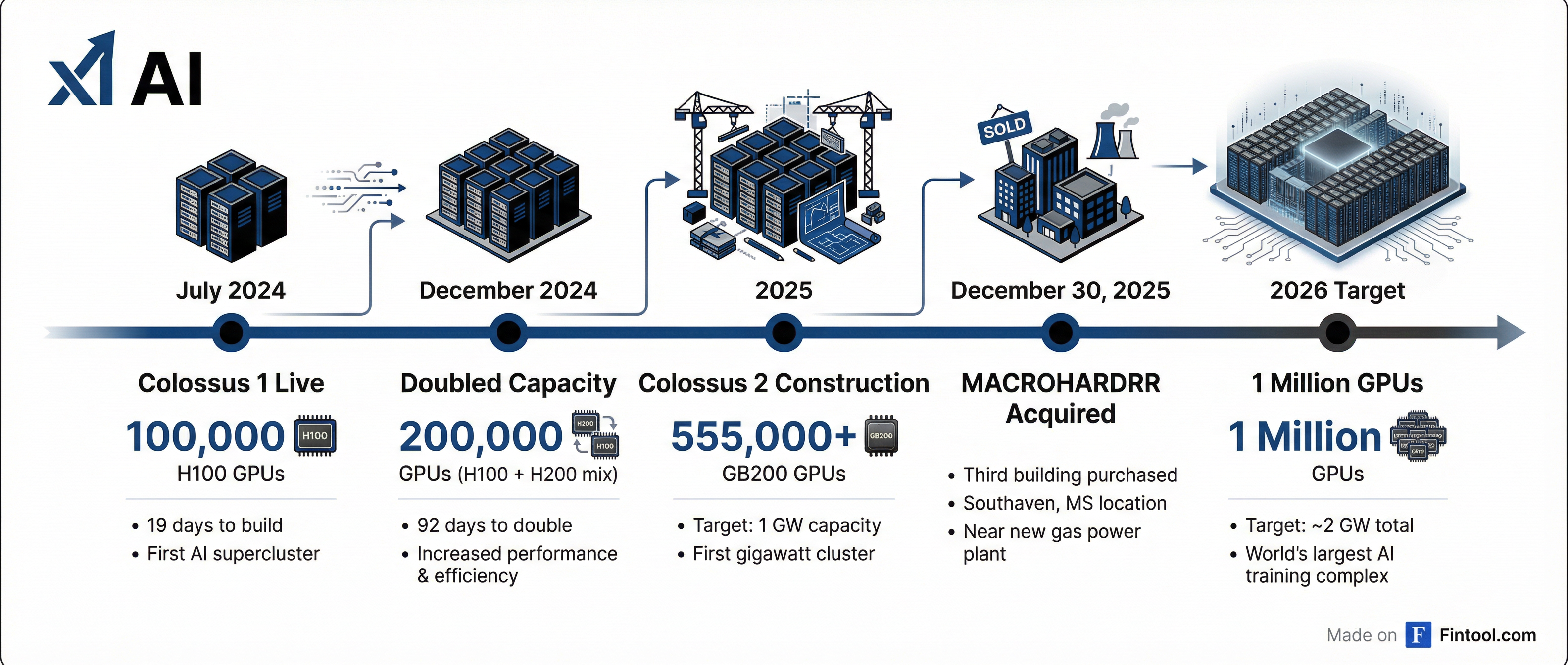

From 122 Days to World Domination

xAI's build velocity has shocked the industry. When Musk first announced plans for Colossus in 2024, experts said it would take 18-24 months to build. xAI did it in 122 days—and then doubled the GPU count to 200,000 in another 92 days.

The current configuration represents the world's largest AI training cluster:

- 150,000 NVIDIA H100 GPUs – The workhorse of the current AI boom

- 50,000 NVIDIA H200 GPUs – Higher memory bandwidth for larger models

- 30,000 NVIDIA GB200 GPUs – Next-generation Blackwell architecture

Total memory bandwidth exceeds 194 petabytes per second, with over 1 exabyte of storage capacity.

The speed of deployment is unprecedented. "We were told it would take 24 months to build," xAI states on its website. "So we took the project into our own hands, questioned everything, removed whatever was unnecessary, and accomplished our goal in four months."

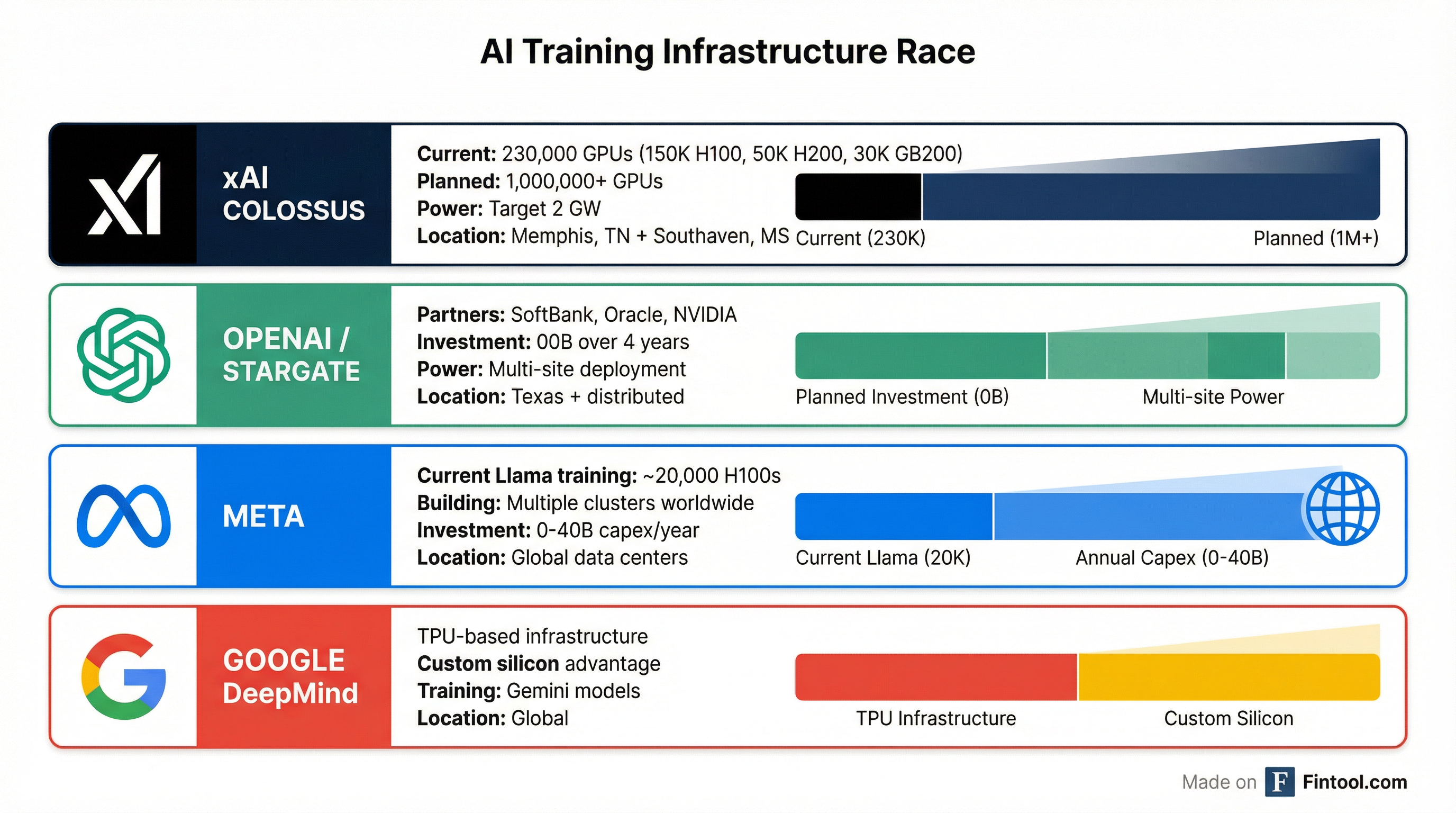

The AI Compute Arms Race

xAI's expansion comes as the battle for AI supremacy intensifies. Just yesterday, SoftBank completed its $40 billion investment in OpenAI, funding the Project Stargate data center initiative that aims to deploy up to $500 billion in AI infrastructure over four years. Yet even that massive commitment may be dwarfed by xAI's ambitions.

The compute comparison is stark:

| Company | Current AI Compute | Investment |
|---|---|---|
| xAI Colossus | 230,000 GPUs → 1M+ planned | Private (est. $10B+) |
| OpenAI/Stargate | Multi-site, distributed | $500B over 4 years |
| Meta | 20,000 H100 equivalent | $30-40B/year capex |
| DeepSeek | 50,000 H800s (est.) | $5.5M for V3 training |

What's notable about xAI's approach is the concentration. While OpenAI and Google distribute compute across multiple data centers and cloud regions, Musk is consolidating an unprecedented amount of GPU power into a single connected cluster in the Memphis area. This approach offers advantages for training massive AI models that require tight interconnection between GPUs, but creates enormous infrastructure challenges.

The Hardware Winners

The buildout is a bonanza for AI infrastructure suppliers:

NVIDIA ($NVDA) – The primary beneficiary. xAI's Colossus 2 alone will require over 555,000 GB200 GPUs at approximately $30,000-40,000 each, representing an $18 billion order before servers and infrastructure.
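The order estimate can be sanity-checked with simple multiplication. This is a back-of-the-envelope sketch using only the unit count and price range quoted above; the function name and variables are illustrative, and actual contract pricing is not public.

```python
def order_value_range(units, low_price, high_price):
    """Return (low, high) total order value in dollars for a unit price range."""
    return units * low_price, units * high_price


GPU_UNITS = 555_000          # GB200 count quoted for Colossus 2
LOW_PRICE = 30_000           # dollars per GPU, low end of the quoted range
HIGH_PRICE = 40_000          # dollars per GPU, high end of the quoted range
QUOTED_ORDER = 18_000_000_000

low, high = order_value_range(GPU_UNITS, LOW_PRICE, HIGH_PRICE)
implied_unit_price = QUOTED_ORDER / GPU_UNITS

print(f"Range: ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")        # $16.65B to $22.20B
print(f"Implied price at $18B: ${implied_unit_price:,.0f}")      # about $32,432
```

The $18 billion figure sits inside the $16.65 billion to $22.2 billion range and implies a unit price of roughly $32,400, toward the low end of the quoted per-GPU estimate.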

NVIDIA's recent financial performance reflects the AI infrastructure boom:

| Metric | Q4 FY2025 | Q1 FY2026 | Q2 FY2026 | Q3 FY2026 |
|---|---|---|---|---|
| Revenue | $39.3B | $44.1B | $46.7B | $57.0B |
| Net Income | $22.1B | $18.8B | $26.4B | $31.9B |
| EBITDA Margin | 62.5%* | 50.5%* | 62.3%* | 64.5%* |

*Values from S&P Global
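For readers who want to derive figures the table does not show, the sketch below computes net margin and quarter-over-quarter revenue growth from the revenue and net income rows. These are derived values, not numbers from S&P Global, and net margin is a different measure from the EBITDA margin listed above; the variable names are illustrative.

```python
# Revenue and net income in billions of dollars, copied from the table above.
QUARTERS = ["Q4 FY2025", "Q1 FY2026", "Q2 FY2026", "Q3 FY2026"]
REVENUE = [39.3, 44.1, 46.7, 57.0]
NET_INCOME = [22.1, 18.8, 26.4, 31.9]


def net_margins(revenue, net_income):
    """Net income as a percentage of revenue, one value per quarter."""
    return [100 * ni / rev for rev, ni in zip(revenue, net_income)]


def qoq_growth(values):
    """Percentage change from each quarter to the next."""
    return [100 * (curr / prev - 1) for prev, curr in zip(values, values[1:])]


margins = net_margins(REVENUE, NET_INCOME)
growth = qoq_growth(REVENUE)

for quarter, margin in zip(QUARTERS, margins):
    print(f"{quarter}: net margin {margin:.1f}%")
for quarter, pct in zip(QUARTERS[1:], growth):
    print(f"{quarter}: revenue growth {pct:.1f}% vs prior quarter")
```

On the table's numbers, net margin is about 56% in three of the four quarters and dips to roughly 43% in the second column, while revenue growth accelerates to about 22% in the most recent quarter.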

Dell Technologies ($DELL) – Reportedly in advanced talks to supply over $5 billion in AI servers containing NVIDIA's GB200 GPUs. Dell has been a key partner since the original Colossus build.

Super Micro Computer ($SMCI) – Supermicro helped build the original 100,000 GPU Colossus cluster and sponsored an in-depth documentation of the facility. The company's liquid-cooled server racks are optimized for the extreme density required in AI training.

Tesla ($TSLA) – Tesla Megapack batteries provide 150 MW of backup power for the Colossus complex, ensuring the cluster can ride through outages.

Environmental Backlash

The rapid expansion has drawn fierce criticism from environmental groups and Memphis residents. The Southern Environmental Law Center (SELC) has accused xAI of operating gas turbines without proper permits and polluting the air in South Memphis—an area that already has some of the worst air quality in the region.

Key concerns include:

- Air Quality: Memphis was designated an "asthma capital" by the Asthma and Allergy Foundation of America in 2024

- Water Usage: The complex may consume up to one million gallons of water daily for cooling

- Power Source: Temporary reliance on gas turbines while grid connections are built

To address water concerns, xAI announced an $80 million wastewater treatment facility that will recycle approximately 13 million liters of wastewater per day.
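The water figures above are quoted in different units (gallons for consumption, liters for recycling), which makes them hard to compare at a glance. A minimal conversion sketch, assuming US liquid gallons (1 gallon = 3.785411784 liters); the function names are illustrative.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact definition of the US liquid gallon


def liters_to_gallons(liters):
    """Convert liters to US liquid gallons."""
    return liters / LITERS_PER_US_GALLON


def gallons_to_liters(gallons):
    """Convert US liquid gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


recycled_gallons = liters_to_gallons(13_000_000)   # planned daily recycling
cooling_liters = gallons_to_liters(1_000_000)      # reported upper bound on daily use

print(f"Recycling capacity: {recycled_gallons / 1e6:.2f} million gallons/day")
print(f"Cooling demand: {cooling_liters / 1e6:.2f} million liters/day")
```

Under that assumption, the planned recycling capacity works out to about 3.4 million gallons per day, compared with the reported cooling demand of up to one million gallons (about 3.8 million liters) per day.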

"Allowing a business to pollute the air with NOx and formaldehyde for nearly one year with no permit or regulatory oversight is egregious," said LaTricea Adams of Young, Gifted, and Green, a local environmental advocacy group.

What Powers Grok

All of this infrastructure serves one primary purpose: training xAI's Grok AI models. Grok 3, released in February 2025, was trained on the expanded 200,000 GPU cluster and was developed with roughly 10 times more computing power than its predecessor Grok 2.

Musk has positioned Grok as a direct competitor to OpenAI's ChatGPT, Anthropic's Claude, and Google's Gemini—but with fewer content restrictions. The model powers AI features across Musk's other companies:

- X (formerly Twitter): Grok integration for Premium+ subscribers

- Tesla: Potential applications in Full Self-Driving and Optimus robot

- SpaceX: Computing support for various operations

xAI recently launched Grok Business and Grok Enterprise tiers, signaling a push into the commercial AI market dominated by OpenAI and Microsoft.

The Bigger Picture

xAI's expansion represents more than just a data center purchase—it's a bet that raw compute will continue to be the primary driver of AI capability improvements. While some researchers argue that algorithmic innovations and data quality matter more than scale (see: DeepSeek's efficient training approach), Musk is clearly in the "more GPUs, better AI" camp.

The Memphis complex also reflects the geographic reshaping of America's tech landscape. Once confined to Silicon Valley, the AI industry's infrastructure is now sprouting in Tennessee, Mississippi, Texas, and other states offering cheap power, available land, and friendly regulatory environments.

For investors, the key questions are:

- Can xAI monetize this compute? The infrastructure costs are astronomical—potentially exceeding $30 billion when fully built out

- Will Grok catch up to ChatGPT? OpenAI's first-mover advantage and Microsoft distribution remain formidable

- How do suppliers benefit? NVIDIA, Dell, and Supermicro are clear winners regardless of which AI company ultimately dominates

As Musk wrote in his announcement, xAI now has "the ability to keep pushing the frontier of AI." Whether that frontier leads to profit or just more power consumption remains to be seen.
